The whole web at 60+ FPS: how the new renderer in Firefox gets rid of slowdowns

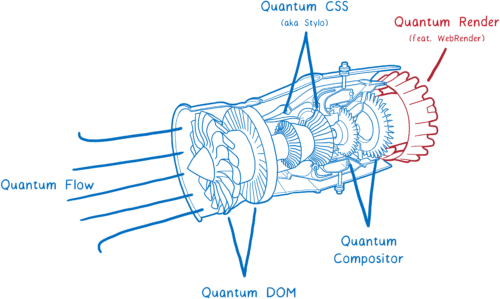

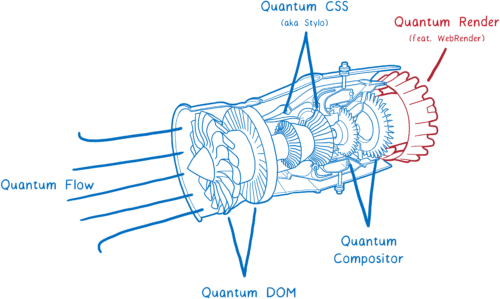

The release of Firefox Quantum is getting close. It will bring many performance improvements, including the ultra-fast CSS engine that we borrowed from Servo.

But there is another big piece of Servo technology that is not yet part of Firefox Quantum, though it soon will be: WebRender, which is part of the Quantum Render project.

WebRender is known for being extremely fast. But its main goal is not so much to make rendering faster as to make it smoother.

In developing WebRender, we set the goal that all applications should run at 60 frames per second (FPS) or better, regardless of the size of the display or how much of the page is animated. And it worked. Pages that chug along at 15 FPS in current Chrome or Firefox fly at 60 FPS with WebRender.

How does WebRender do it? It fundamentally changes how the rendering engine works, making it more like a 3D game engine.

Let's look at what that means. But first...

What does a renderer do?

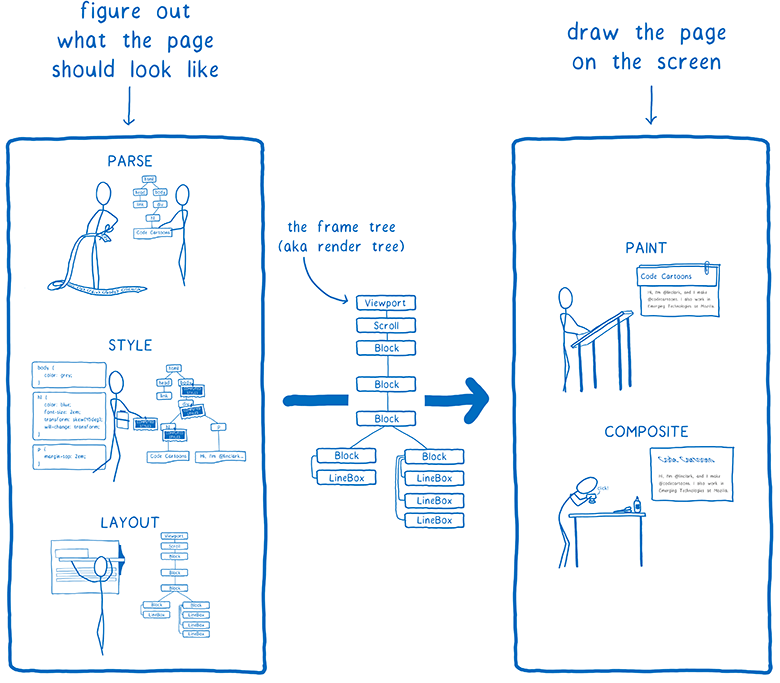

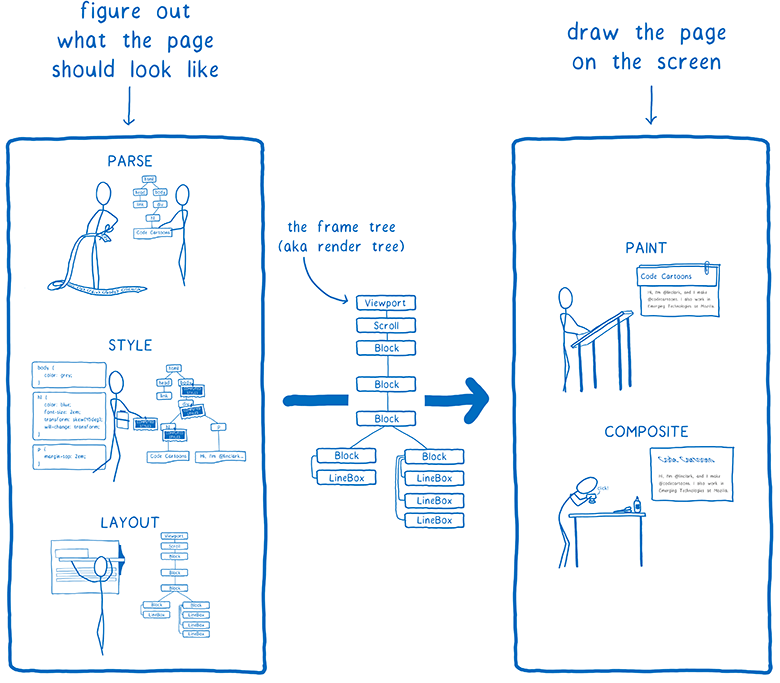

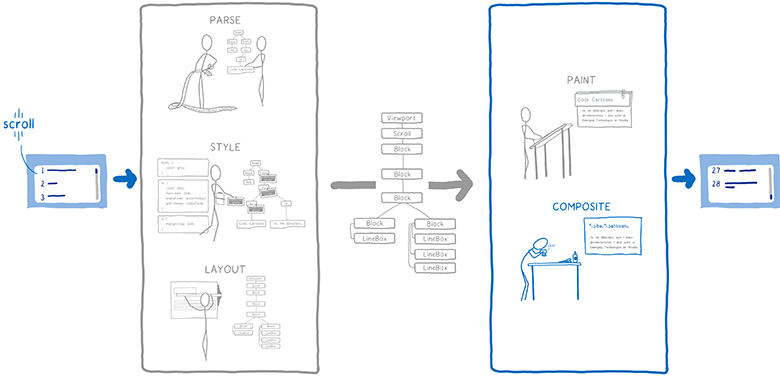

In the article on Stylo, I explained how the browser goes from parsing HTML and CSS to pixels on the screen, and that most browsers do it in five steps.

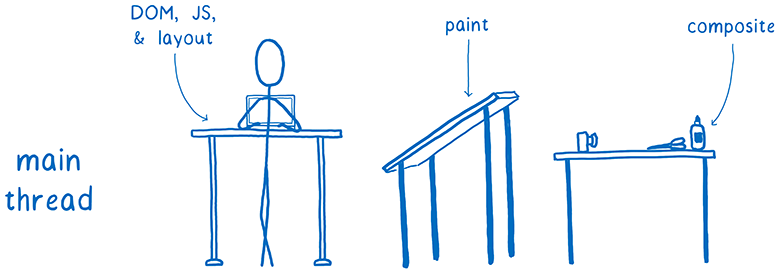

These five steps can be divided into two parts. The first is essentially making a plan. To make the plan, the browser parses the HTML and CSS and takes into account information such as the viewport size to figure out exactly what each element should look like: its width, height, color, and so on. The end result is called the frame tree or the render tree.

The second part, painting and compositing, is where the renderer comes in. It takes the plan and turns it into pixels on the screen.

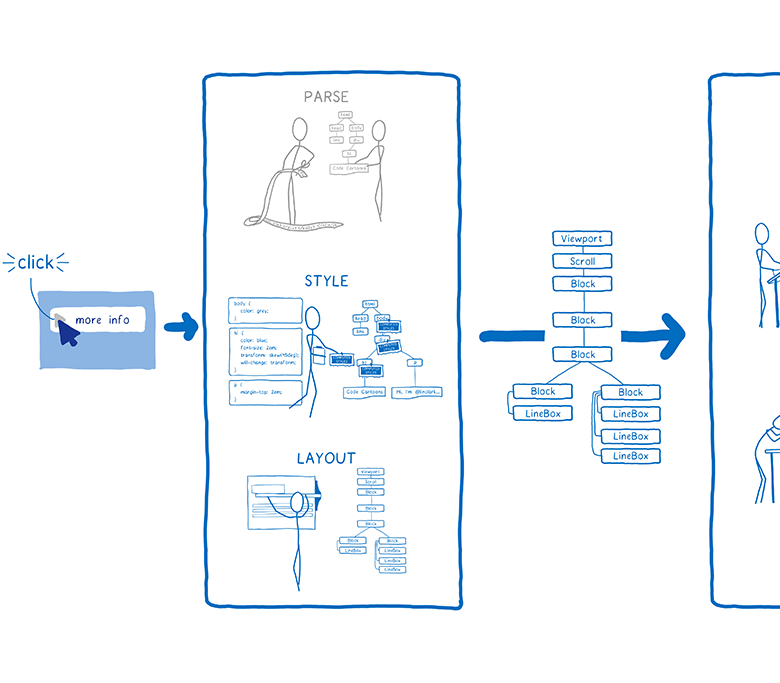

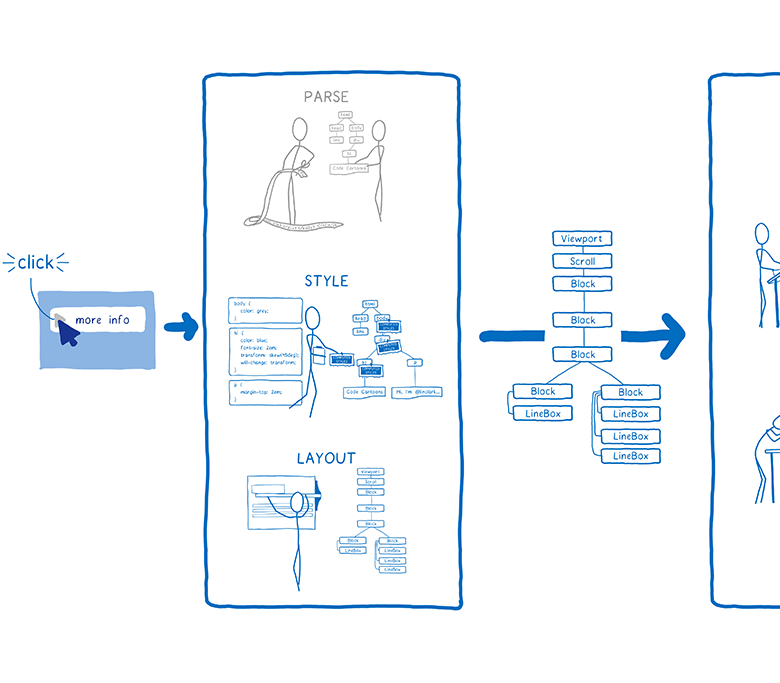

But the browser doesn't do this just once. It has to repeat the process over and over for the same web page. Every time something on the page changes, for example when a div is toggled open, the browser has to go through many of these steps again.

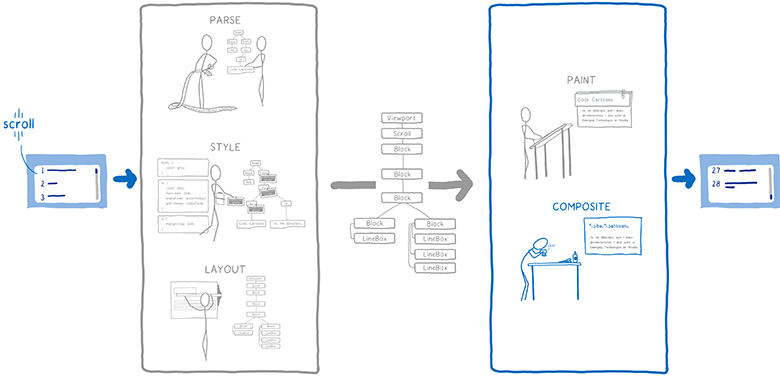

Even when nothing on the page really changes, for example when you are just scrolling or selecting text, the browser still has to do rendering work to draw new pixels on the screen.

For scrolling and animation to look smooth, the screen has to be updated at 60 frames per second.

You may have heard the phrase frames per second (FPS) before without being sure what it means. I think of frames as the pages of a flip book: a book of static drawings that you flip through quickly to create the illusion of animation.

For the animation in this flip book to look smooth, you need to see 60 pages every second.

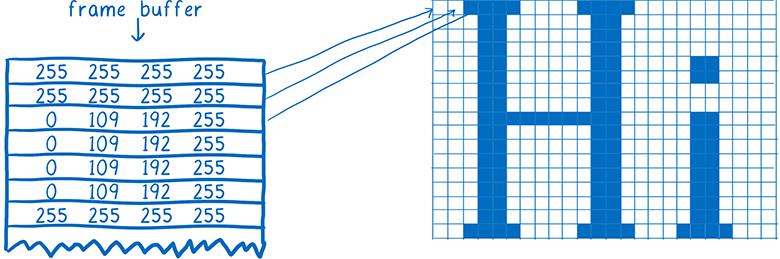

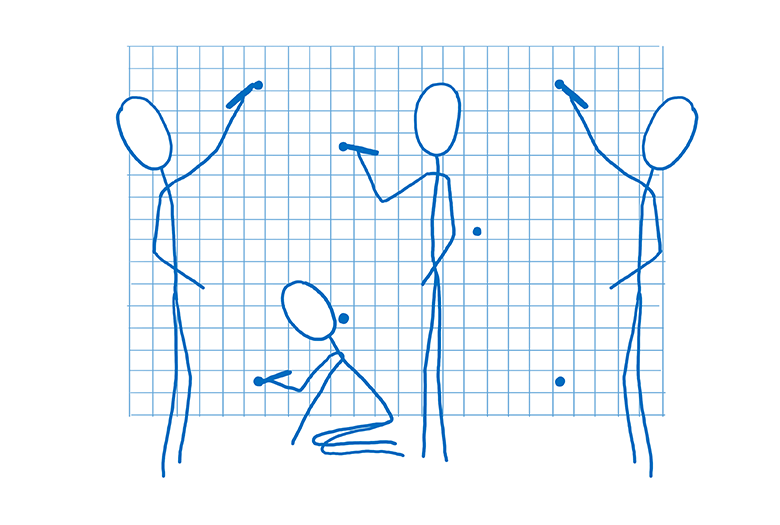

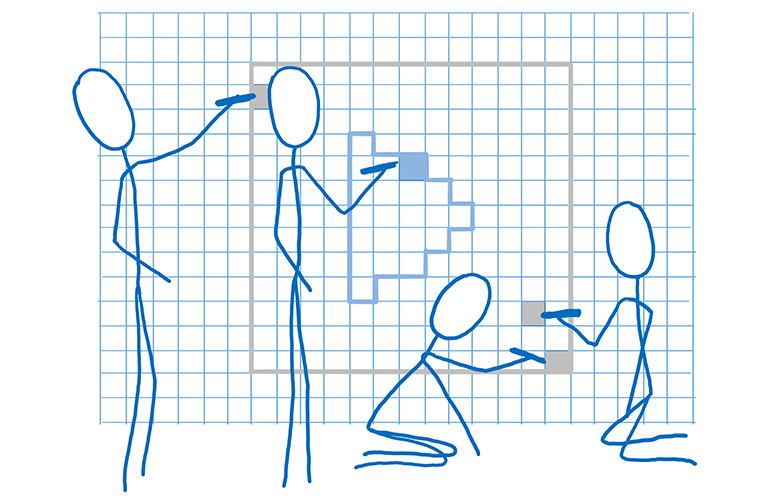

The pages of this flip book are made of graph paper. There are lots and lots of little squares, and each square can hold only one color.

The renderer's job is to fill in the squares on this graph paper. Once all of them are filled in, the frame is finished.

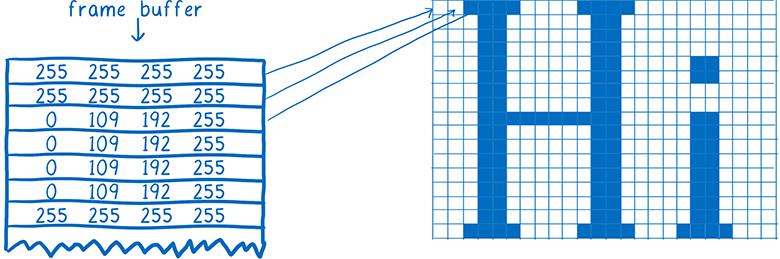

Of course, there is no actual graph paper in your computer. Instead, there is a region of memory called the frame buffer. Each memory address in the frame buffer is like a square on the graph paper: it corresponds to a pixel on the screen. The browser fills each slot with numbers that represent the RGBA (red, green, blue, and alpha) values of the color.

When the display needs to refresh, it reads this region of memory.
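As a rough illustration of the graph-paper analogy, here is a minimal sketch of a frame buffer as a flat byte array. The tiny 4x3 size, the 4-bytes-per-pixel RGBA layout, and the helper names are assumptions made for this example; real frame buffers vary in layout and pixel format.

```python
# A toy frame buffer: one flat block of memory, 4 bytes (R, G, B, A) per pixel.
# The dimensions and helper names are illustrative assumptions.
WIDTH, HEIGHT = 4, 3
BYTES_PER_PIXEL = 4

framebuffer = bytearray(WIDTH * HEIGHT * BYTES_PER_PIXEL)


def pixel_offset(x, y, width=WIDTH):
    """Index of the first byte of pixel (x, y) in row-major order."""
    return (y * width + x) * BYTES_PER_PIXEL


def set_pixel(buf, x, y, rgba):
    """Fill in one 'square of graph paper' with an RGBA color."""
    off = pixel_offset(x, y)
    buf[off:off + BYTES_PER_PIXEL] = bytes(rgba)


def get_pixel(buf, x, y):
    """Read back the RGBA values stored for pixel (x, y)."""
    off = pixel_offset(x, y)
    return tuple(buf[off:off + BYTES_PER_PIXEL])


set_pixel(framebuffer, 2, 1, (255, 0, 0, 255))  # opaque red
print(pixel_offset(2, 1))            # 24
print(get_pixel(framebuffer, 2, 1))  # (255, 0, 0, 255)
```

Rendering a frame then amounts to writing a value into every one of these slots before the display reads the buffer.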

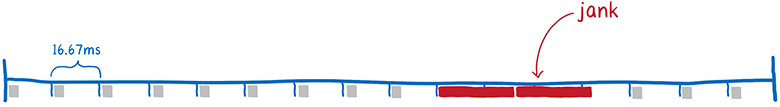

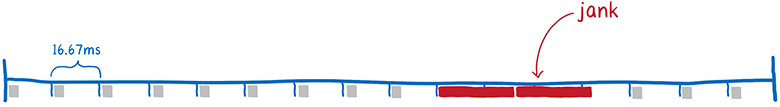

Most computer displays refresh 60 times per second. That is why browsers try to render pages at 60 frames per second. It means the browser has only 16.67 milliseconds to do all of the work: CSS styling, layout, painting, and filling every slot in the frame buffer with the numbers that correspond to colors. This interval between two frames (16.67 ms) is called the frame budget.

You may have heard people talk about dropped frames. A dropped frame happens when the system doesn't finish within the budget. The display tries to fetch a new frame from the frame buffer before the browser has finished filling it in. In that case, the display shows the old version of the frame again.

Dropped frames are like pages torn out of a flip book. The animation appears to stutter and jump because the transition between the previous page and the next one is missing.
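The frame budget is simple arithmetic: one second divided by the refresh rate. The sketch below works it out and counts how many frames in a made-up list of timings would miss the budget. The function names and the sample timings are invented for the illustration.

```python
def frame_budget_ms(refresh_rate_hz):
    """Time available to produce one frame, in milliseconds."""
    return 1000.0 / refresh_rate_hz


def frames_over_budget(frame_times_ms, refresh_rate_hz=60.0):
    """Count frames whose work took longer than the budget."""
    budget = frame_budget_ms(refresh_rate_hz)
    return sum(1 for t in frame_times_ms if t > budget)


print(round(frame_budget_ms(60), 2))  # 16.67
print(round(frame_budget_ms(90), 2))  # 11.11

# Hypothetical per-frame timings in milliseconds.
timings = [12.0, 15.5, 21.0, 16.0, 40.2]
print(frames_over_budget(timings))    # 2
```

At 90 FPS the budget shrinks to about 11 ms, which is why higher refresh rates leave even less room for slow frames.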

So we need to get all of the pixels into the frame buffer before the display checks it again. Let's look at how browsers have handled this historically and how that has changed over time. Then we can see how to make the process faster.

A brief history of painting and compositing

Note: painting and compositing is where browser rendering engines differ from each other the most. Single-platform browsers (Safari and Edge) work somewhat differently from multi-platform browsers (Firefox and Chrome).

Even the earliest browsers had some optimizations to make pages render faster. For example, when you scrolled, the browser would move the already-rendered parts of the page and then paint new pixels only in the area that was left blank.

The process of figuring out what has changed and then updating only the changed elements or pixels is called invalidation.

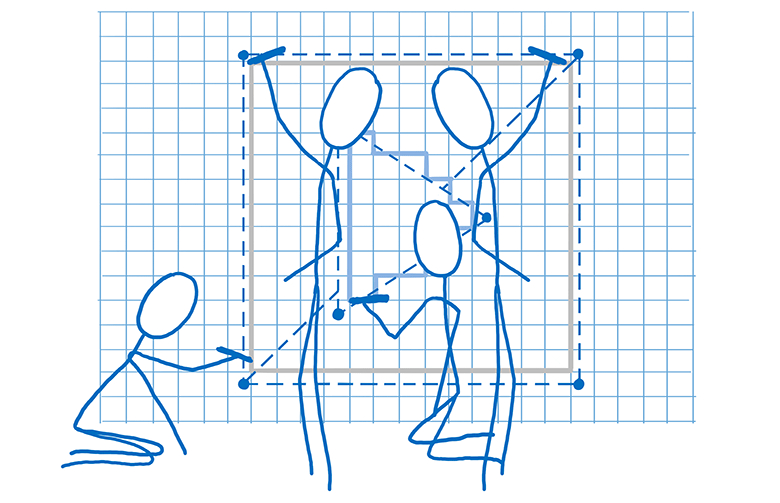

Over time, browsers began to use more advanced invalidation techniques, such as rectangle invalidation. With this technique, the browser finds the smallest rectangle around each part of the screen that changed and then updates only the pixels inside those rectangles.

This greatly reduces the amount of work when only a small number of elements on the page change, for example just a blinking cursor.
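To get a feel for how much work rectangle invalidation can save, here is a small sketch that computes the bounding rectangle of the changed regions and compares its area with the whole screen. The `Rect` type, the screen size, and the cursor dimensions are assumptions chosen for the example, not values from any browser.

```python
from typing import NamedTuple


class Rect(NamedTuple):
    x0: int
    y0: int
    x1: int  # exclusive
    y1: int  # exclusive

    def area(self):
        return max(0, self.x1 - self.x0) * max(0, self.y1 - self.y0)


def bounding_rect(rects):
    """Smallest rectangle that contains every changed region."""
    return Rect(
        min(r.x0 for r in rects),
        min(r.y0 for r in rects),
        max(r.x1 for r in rects),
        max(r.y1 for r in rects),
    )


screen = Rect(0, 0, 1920, 1080)
# Hypothetical change: a 2x18 pixel text cursor blinked.
changed = [Rect(400, 300, 402, 318)]

dirty = bounding_rect(changed)
print(dirty.area())   # 36 pixels to repaint
print(screen.area())  # 2073600 pixels on the whole screen
```

When the changed regions are small and close together, the dirty rectangle stays tiny. When they are spread across the page, the bounding rectangle grows toward the full screen and the saving disappears.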

But it doesn't help much when large parts of the page change. Those cases called for new techniques.

The rise of layers and compositing

Using layers can help a lot when large parts of the page change, at least in some cases.

Layers in browsers are like layers in Photoshop, or like the sheets of thin paper that used to be used in hand-drawn animation. The basic idea is that you paint different elements of the page on different layers and then stack those layers on top of each other.

Browsers had used layers for a long time, but not always to speed up rendering. At first they were used only to make sure elements rendered correctly. They corresponded to what is called a stacking context.

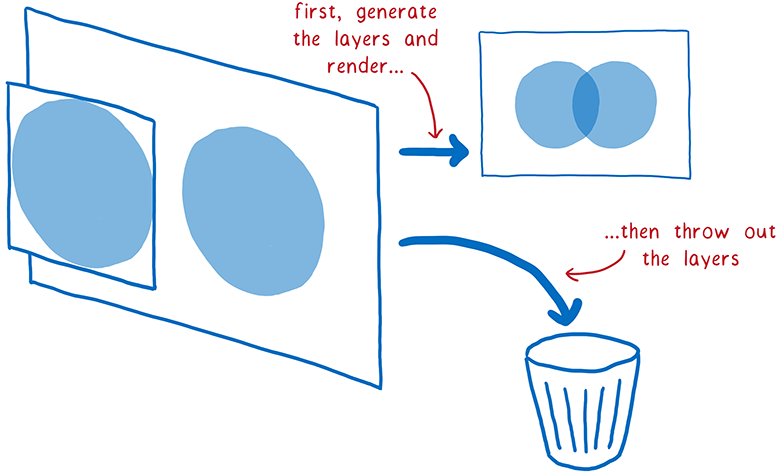

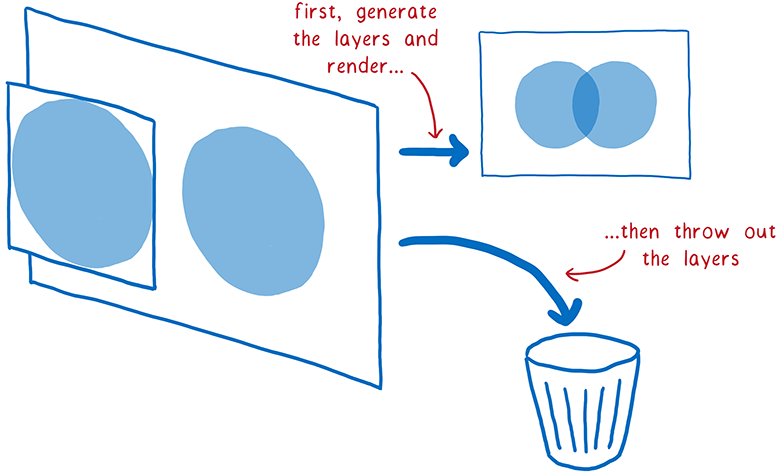

For example, if the page has a translucent element, it must be in its own stacking context. That means it gets its own layer so that its color can be blended with the color of the element beneath it. These layers were thrown away as soon as the frame was finished. On the next frame, the layers had to be painted all over again.

But often the contents of these layers do not change from frame to frame. Think of a traditional animation: the background doesn't change even when the characters in the foreground move. It is much more efficient to keep the background layer and simply reuse it.

So that is what browsers did. They began to retain layers and repaint only the ones that had changed. And in some cases the layers didn't change at all. They only needed to be moved, for example when an animation moves an element across the screen or when something is scrolled.

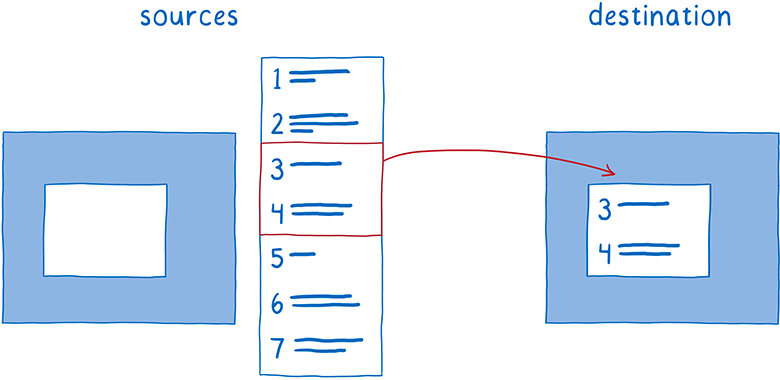

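This process of arranging layers together is called compositing. The compositor works with the following objects:

source bitmaps: the background (including a blank box where the scrollable content should go) and the scrollable content itself;

the destination bitmap, which is what is shown on the screen.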

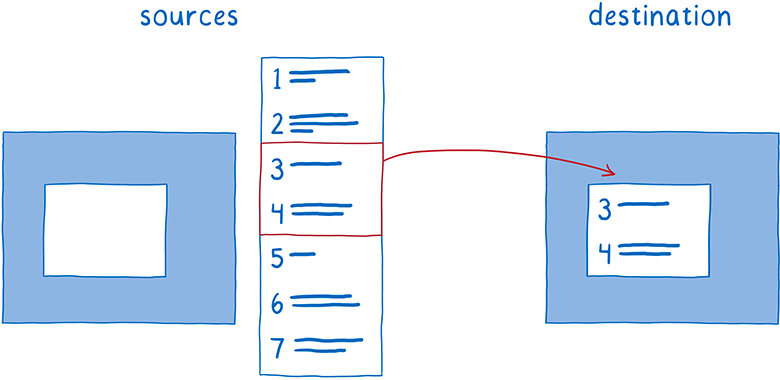

First, the compositor copies the background to the destination bitmap.

Then it figures out which part of the scrollable content should be showing and copies that part on top of the destination bitmap.
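The two copy steps above can be sketched with tiny character "bitmaps". This is only a toy model under stated assumptions: each row is a list of characters, the scroll box is a band of full-width rows, and the function name and parameters are invented for the example.

```python
def composite(background, content, scroll_y, box_top, box_height):
    """Build the destination bitmap from two retained source bitmaps."""
    # Step 1: copy the background into the destination bitmap.
    dest = [row[:] for row in background]
    # Step 2: copy the visible slice of the scrollable content on top.
    visible = content[scroll_y:scroll_y + box_height]
    for i, row in enumerate(visible):
        dest[box_top + i] = row[:]
    return dest


# Background with a two-row blank box between a header (H) and a footer (F).
background = [list("HHHH"), list("...."), list("...."), list("FFFF")]
# Scrollable content that is taller than the box.
content = [list("aaaa"), list("bbbb"), list("cccc"), list("dddd")]

frame = composite(background, content, scroll_y=1, box_top=1, box_height=2)
print(["".join(row) for row in frame])  # ['HHHH', 'bbbb', 'cccc', 'FFFF']
```

Scrolling only changes `scroll_y`. Neither source bitmap has to be repainted, which is the saving that retained layers provide.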

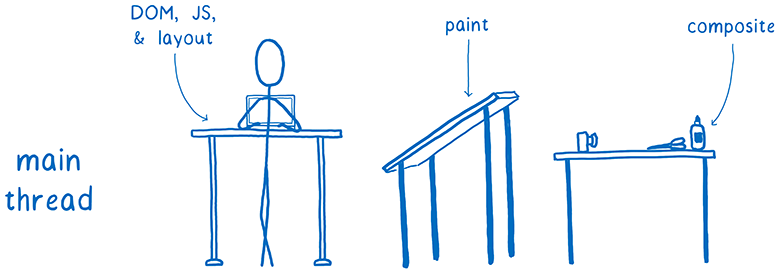

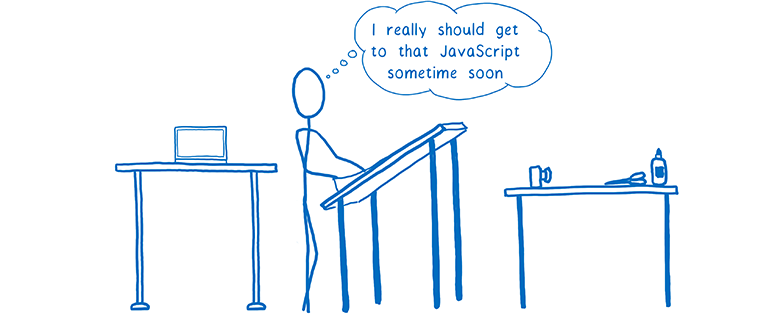

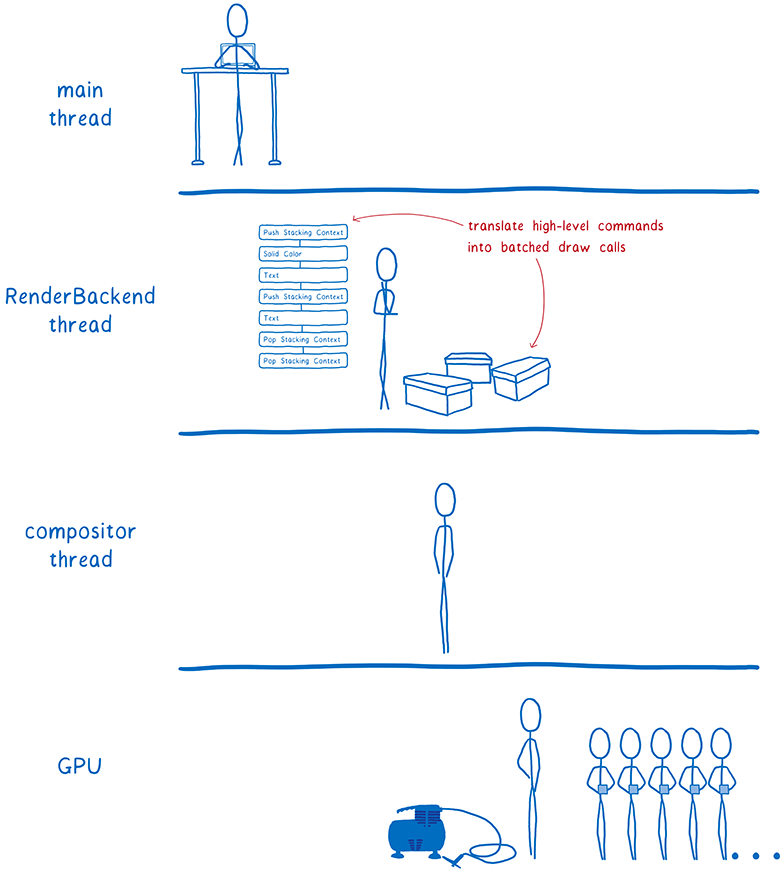

This reduces the amount of painting that the main thread has to do. But the main thread still spends a lot of time on compositing. And there are many tasks competing for time on the main thread.

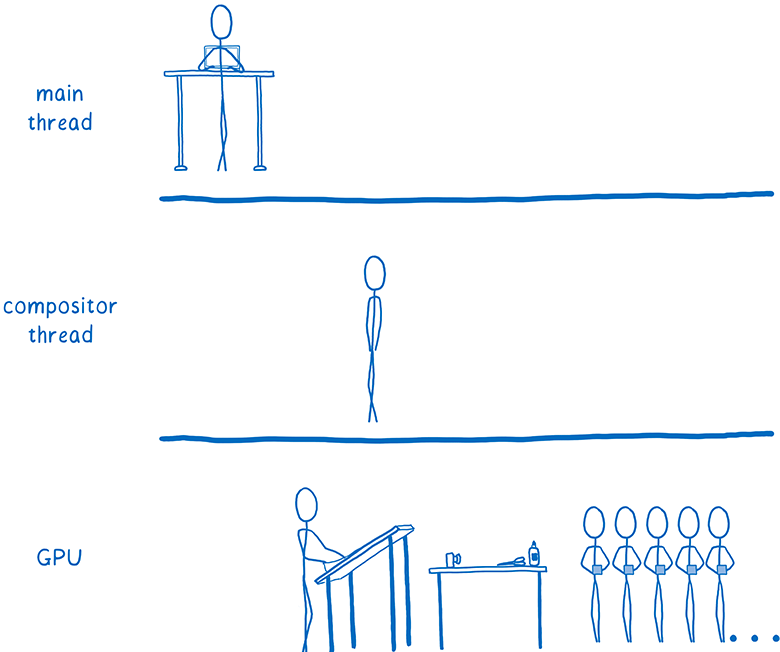

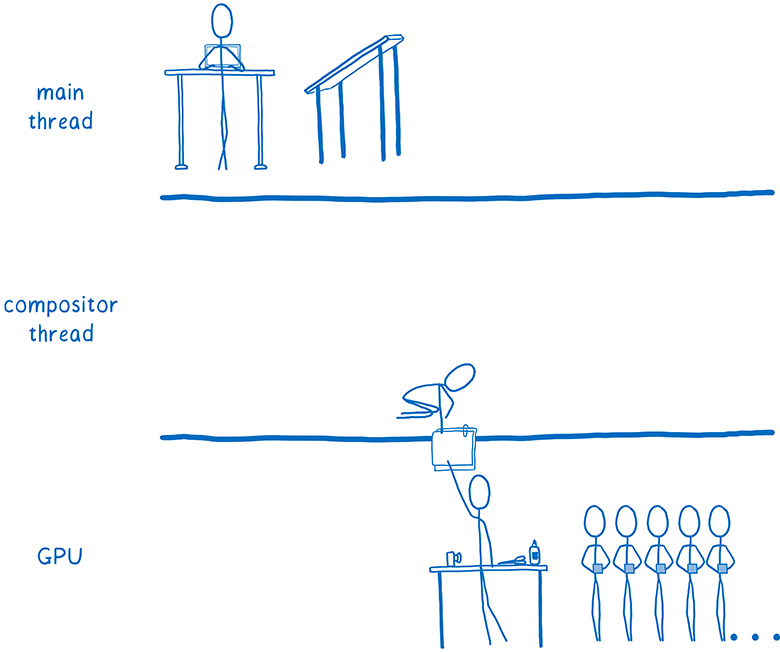

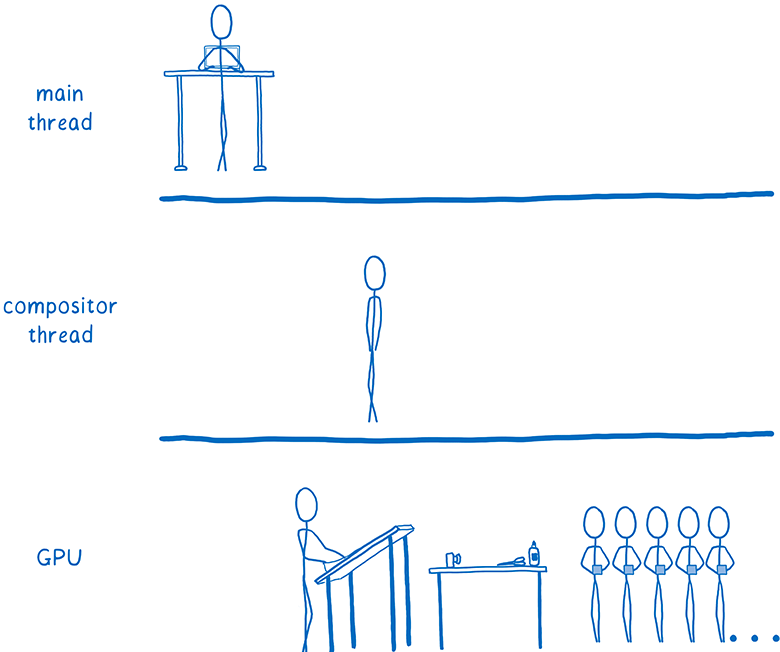

I have used this comparison before: the main thread is like a full-stack developer. It is in charge of the DOM, layout, and JavaScript. And it is also in charge of painting and compositing.

Every millisecond the main thread spends on painting and compositing is a millisecond taken away from JavaScript or layout.

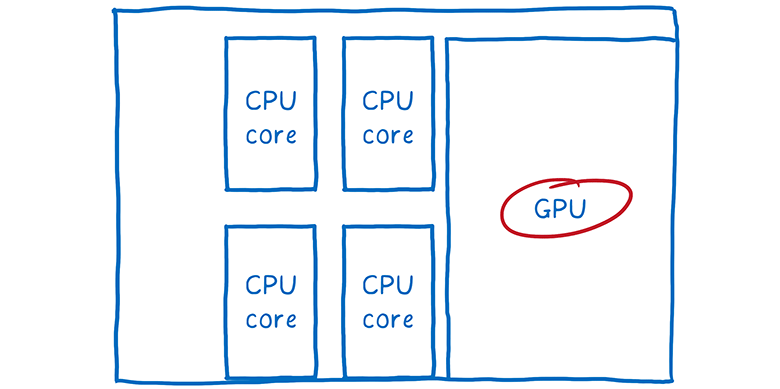

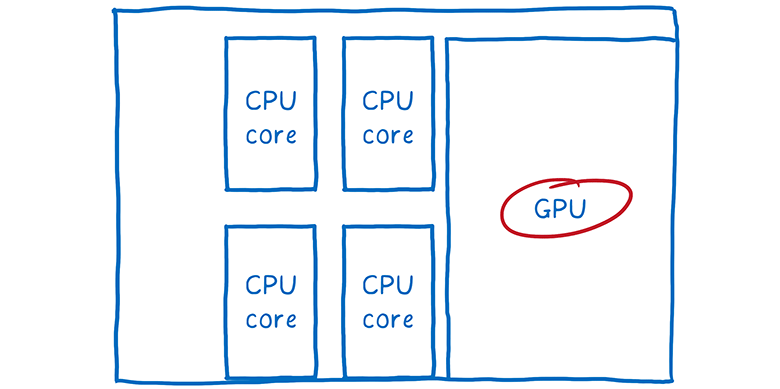

But there is another piece of hardware sitting there with almost nothing to do. And it was built specifically for graphics. That is the GPU, which games have used since the 1990s to render frames quickly. And GPUs have been getting more and more powerful ever since.


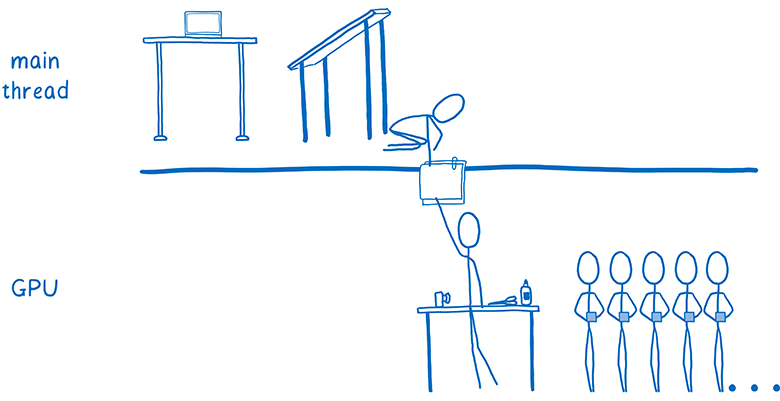

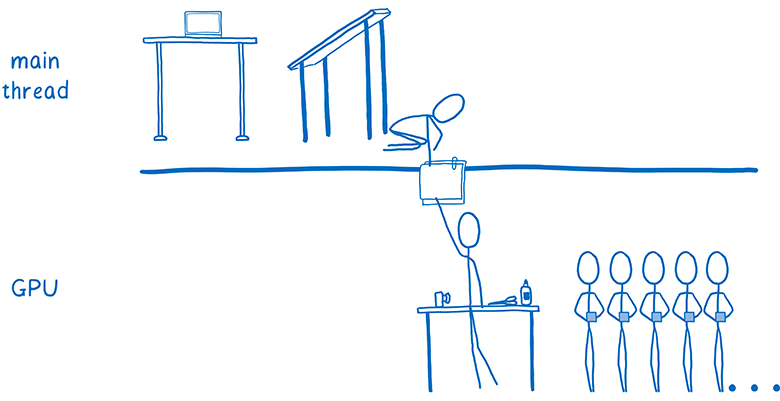

So the browser developers started to hand the work off to the GPU.

In theory, two tasks could be moved to the GPU: painting the layers and compositing them together.

Painting can be hard to move to the GPU, so multi-platform browsers usually left this task on the CPU.

Compositing, however, is something the GPU can do very quickly, and it is easy to move over.

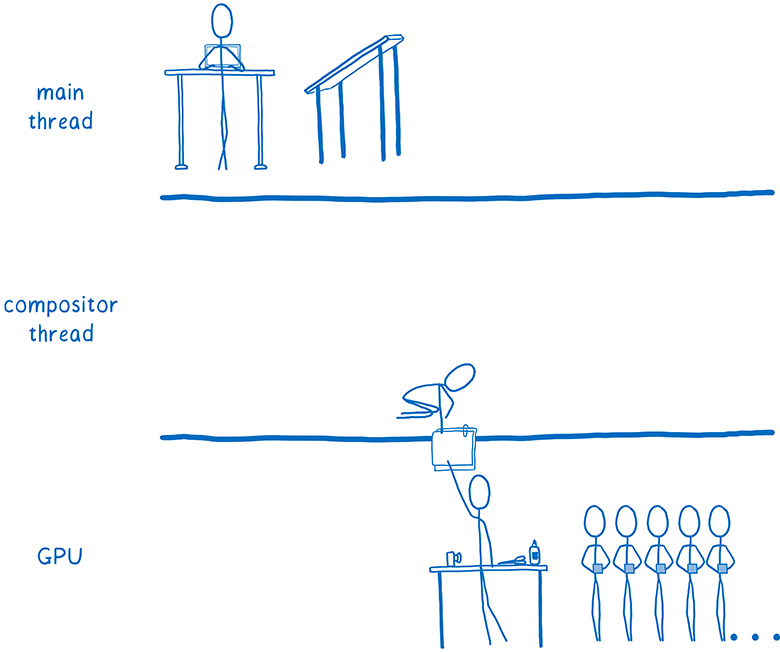

Some browsers took this parallelism even further by adding a compositor thread on the CPU. It manages all of the compositing work that happens on the GPU. This means that if the main thread is busy (for example, running JavaScript), the compositor thread can still do work the user will notice, such as scrolling content.

This moves all of the compositing work off the main thread. It still leaves a lot of work there, though. Whenever a layer needs to be repainted, the main thread has to do it and then transfer the layer to the GPU.

Some browsers moved painting to another thread as well (and we are working on that in Firefox today). But it is even faster to move this last piece of work, painting, directly to the GPU.


So browsers started moving painting to the GPU as well.

This transition is still in progress. Some browsers paint on the GPU all of the time, while others do so only on certain platforms (for example, only on Windows or only on mobile devices).

Painting on the GPU has several effects. It frees up the CPU to spend its time on tasks like JavaScript and layout. The GPU is also much faster at drawing pixels than the CPU, so painting itself gets faster. And it reduces the amount of data that has to be copied from the CPU to the GPU.

But keeping this separation between painting and compositing still has a cost, even when both happen on the GPU. The separation also limits the kinds of optimizations that can be used to make the GPU work faster.

This is where WebRender comes in. It fundamentally changes how we render by removing the distinction between painting and compositing. This lets us tailor the renderer's performance to the demands of today's web and prepare it for the use cases of tomorrow.

In other words, we didn't just want to make frames render faster. We wanted them to render more consistently, without slowdowns. And even when there are a lot of pixels to draw, as with 4K WebVR headsets, we still want the experience to be smooth.


The optimizations above help pages render faster in certain cases. When very little on the page changes, for example just a blinking cursor, the browser does the least amount of work possible.

Once pages were broken into layers, the number of these best-case scenarios grew. If you can paint a few layers once and then just move them relative to each other, the painting plus compositing architecture works well.

But layers have drawbacks. They take up a lot of memory and can sometimes make rendering slower. Browsers need to merge layers where it makes sense, but it is hard to tell exactly where it does.

So if a lot of different things are moving on the page, you can end up with too many layers. These layers fill up memory and take too long to transfer to the compositor.

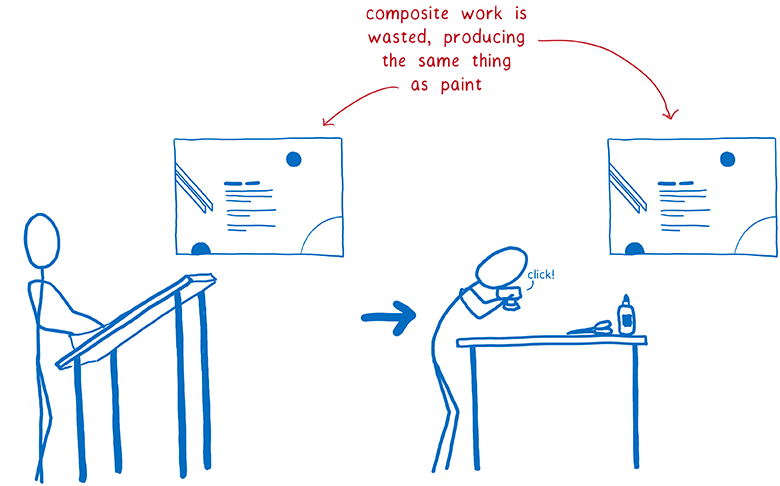

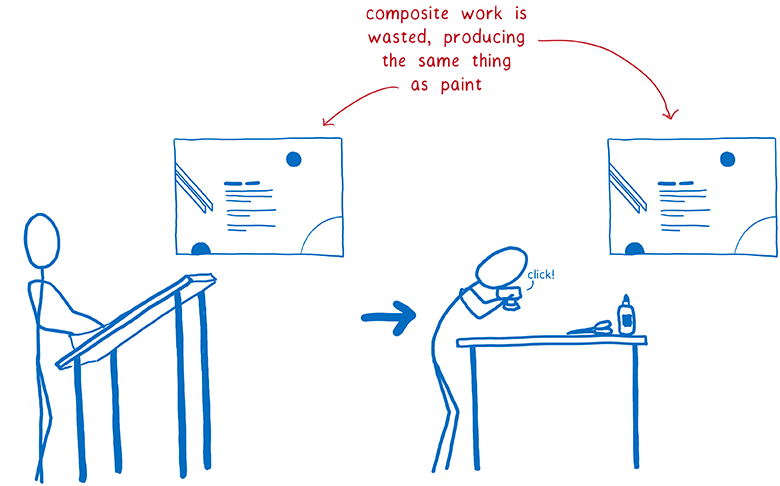

In other cases, you end up with one layer where there should be several. That single layer is continually repainted and handed to the compositor, which then composites it without changing anything.

This means the rendering effort is doubled: every pixel is touched twice for no benefit. It would be faster to render the page directly and skip the compositing step.

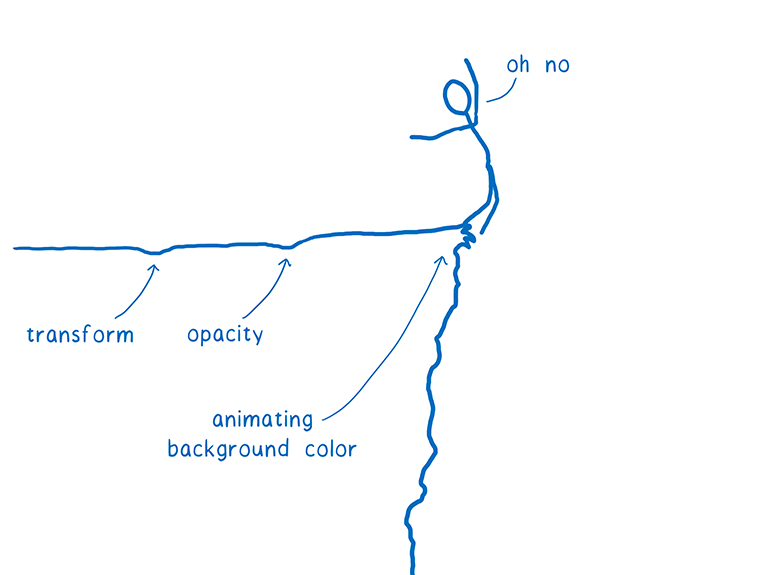

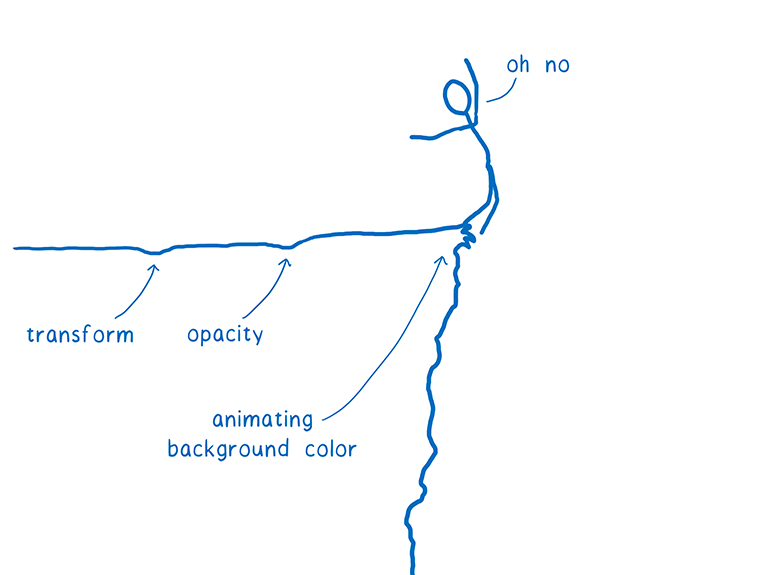

There are also many cases where layers simply don't help. For example, if you have an animated background, the whole layer has to be repainted anyway. Layers help only with a small number of CSS properties.

Even if most of your frames are best-case scenarios, that is, they take up only a small part of the frame budget, motion can still be choppy. For stutter to be noticeable, it is enough for just a couple of frames to fall into the worst-case scenarios.

These scenarios are called performance cliffs. The app seems to work fine until it hits one of these worst cases (such as an animated background), and then the frame rate suddenly falls off the edge.

But you can get rid of these cliffs.

How? By following the lead of 3D game engines.


What if we stopped trying to guess which layers we need? What if we removed this intermediate stage between painting and compositing and just went back to painting every pixel on every frame?

This may sound like a ridiculous idea, but there is precedent for it. Modern video games repaint every pixel, and they hold 60 FPS more reliably than browsers do. They do it in an unexpected way: instead of creating invalidation rectangles and layers to minimize the area that has to be redrawn, they simply repaint the whole screen.

Wouldn't rendering a web page that way be much slower?

If we painted on the CPU, yes. But GPUs are designed for exactly this kind of work.

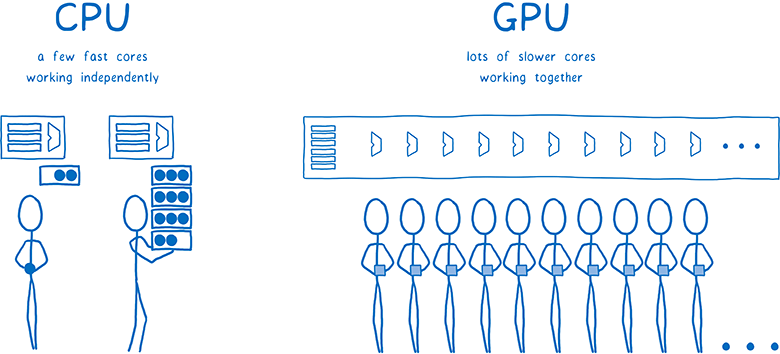

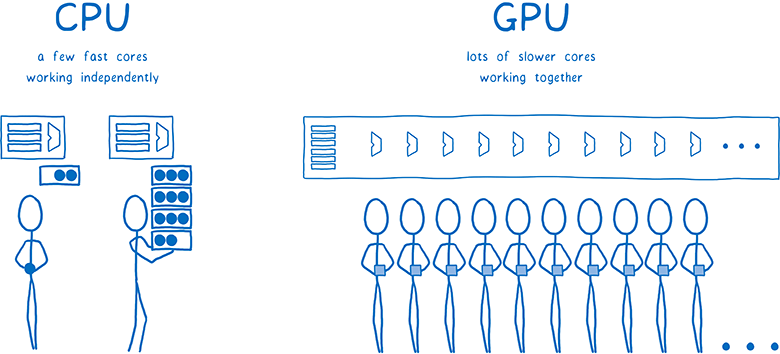

GPUs are built for extreme parallelism. I talked about parallelism in my last article, about Stylo. With parallelism, the computer does several things at the same time. The number of things it can do at once is limited by the number of cores it has.

CPUs usually have between 2 and 8 cores, while GPUs have at least a few hundred and often more than 1,000.

These cores work a little differently, though. They can't act independently the way CPU cores can. Instead, they usually work on something together, running the same instruction on different pieces of data.

This is exactly what we need when filling in pixels. The pixels can be divided up among the cores. Because the GPU can work on hundreds of pixels at a time, it is much faster at filling in pixels than the CPU, but only if all of those cores have work to do.

Because the cores need to work on the same thing at the same time, GPUs go through a fairly rigid set of steps, and their APIs are quite constrained. Let's look at how this works.

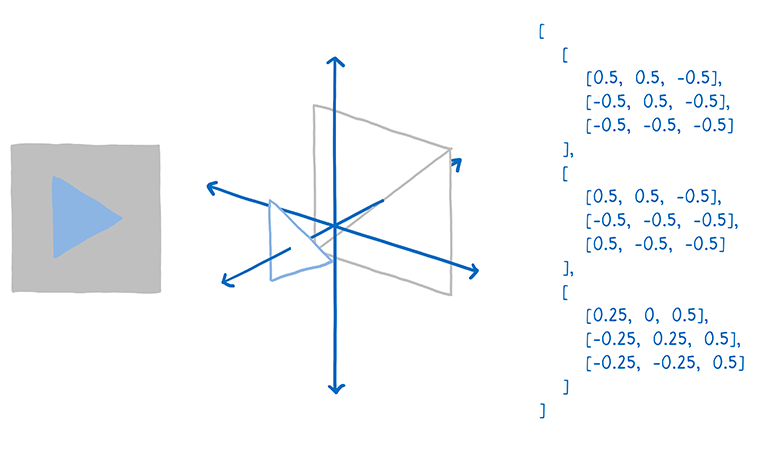

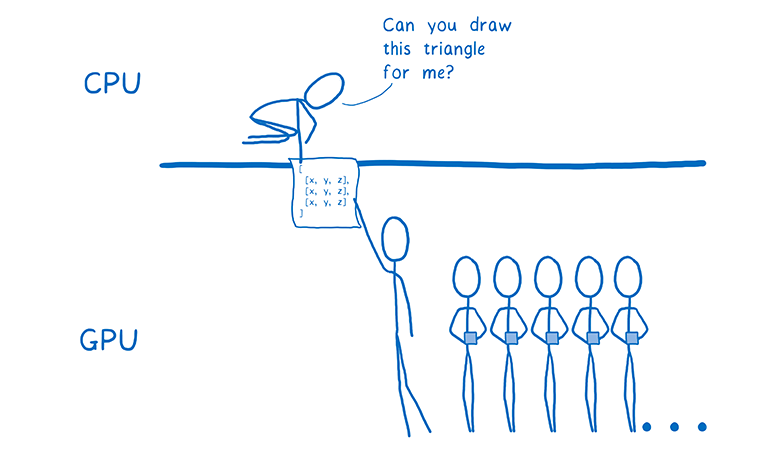

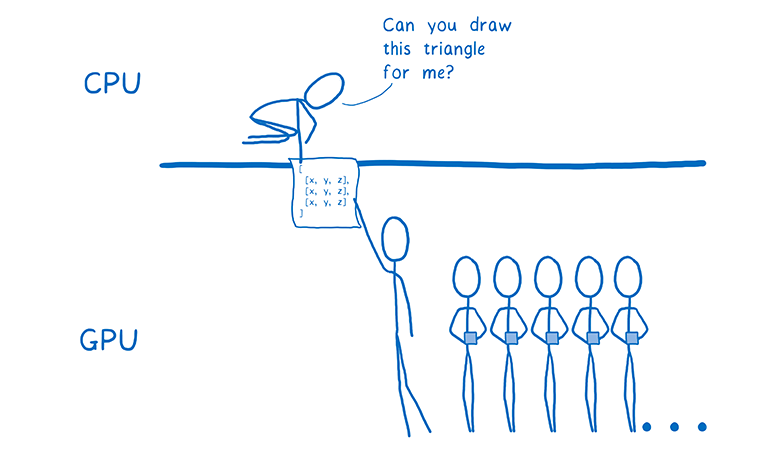

First, you need to tell the GPU what to draw. This means giving it shapes and telling it how to fill them in.

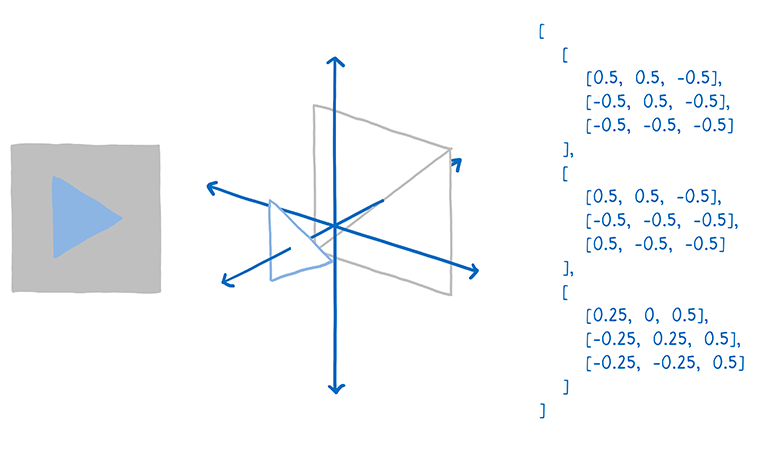

To do this, you break the drawing into simple shapes (usually triangles). These shapes are in 3D space, so some of them can be behind others. Then you take the corners of all the triangles and put their x, y, and z coordinates into an array.

Then you issue a command telling the GPU to draw those shapes (a draw call).

From this point, the GPU takes over. All of the cores work on the same thing at the same time, going through a fixed series of steps that ends with filling in the pixels each shape covers.

This last step can be done in different ways. To tell the GPU exactly how to do it, you give it a special program called a pixel shader. Pixel shading is one of the few parts of the GPU that you can program.

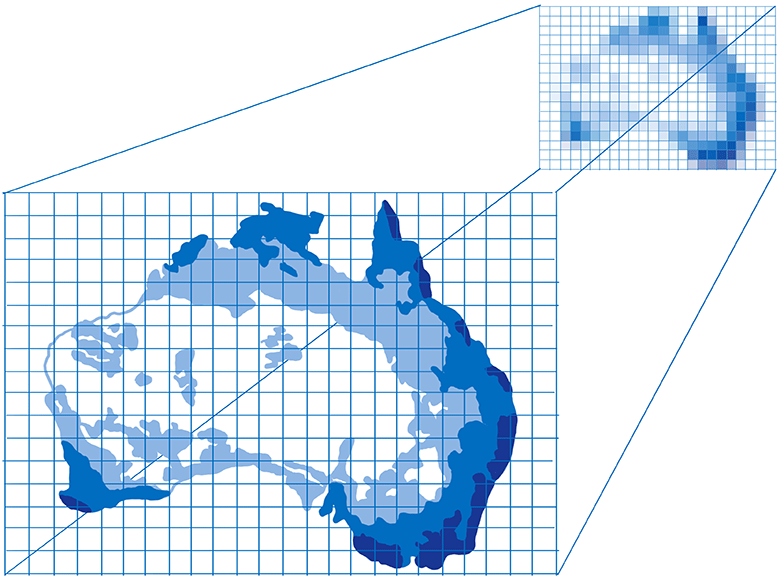

Some pixel shaders are very simple. For example, if the shape is a single color, the shader just needs to assign that color to each pixel in the shape.

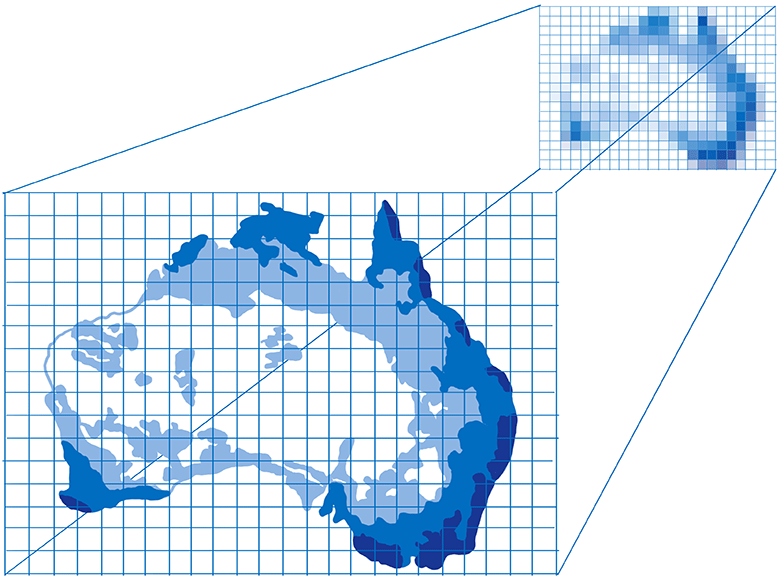

Other shaders are more complex, for example when there is a background image. Here you have to figure out which part of the image corresponds to each pixel. You can do this the same way an artist scales an image up or down: lay a grid over the image with one square for each pixel, then take samples of the colors inside each square and determine the final color of the pixel. This is called texture mapping, because the image (called a texture) is mapped onto the pixels.
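Here is a minimal sketch of that sampling idea, using the simplest possible strategy (nearest-neighbour sampling at the center of each output pixel). Real GPUs offer filtering modes that blend several samples. The function names and the 2x2 "texture" of letters are assumptions for the example.

```python
def sample_nearest(texture, u, v):
    """Return the texel at normalized coordinates (u, v), each in [0, 1]."""
    height = len(texture)
    width = len(texture[0])
    x = min(int(u * width), width - 1)
    y = min(int(v * height), height - 1)
    return texture[y][x]


def draw_textured_rect(texture, out_w, out_h):
    """Run a tiny 'pixel shader' once per output pixel."""
    return [
        [
            # Sample at the center of the output pixel's grid square.
            sample_nearest(texture, (x + 0.5) / out_w, (y + 0.5) / out_h)
            for x in range(out_w)
        ]
        for y in range(out_h)
    ]


# A 2x2 texture: each letter stands for one color.
texture = [["R", "G"],
           ["B", "W"]]

for row in draw_textured_rect(texture, 4, 4):
    print("".join(row))
# RRGG
# RRGG
# BBWW
# BBWW
```

Every output pixel runs the same small function on different coordinates, which is the kind of work that spreads well across many GPU cores.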

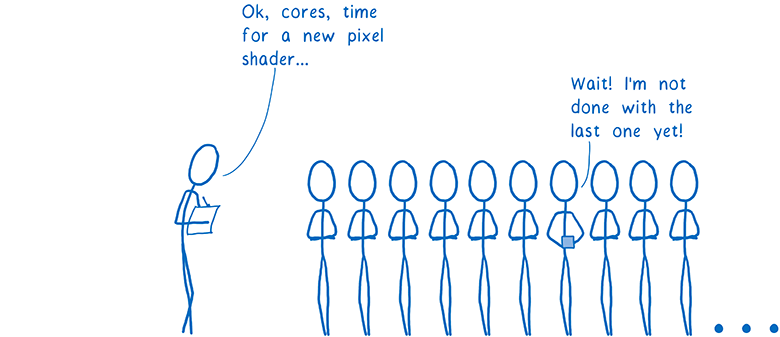

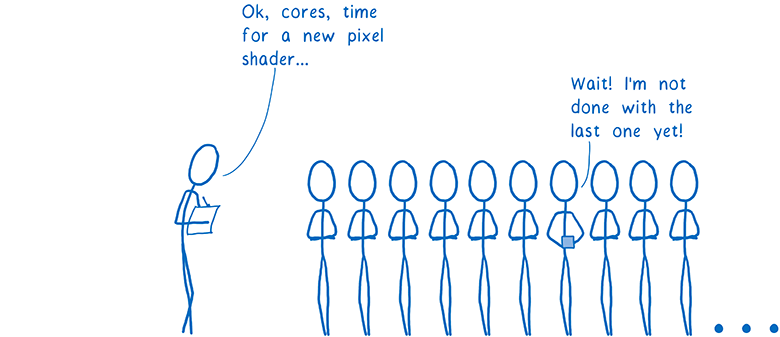

The GPU calls the pixel shader for every pixel. Different cores work on different pixels in parallel, but they all need to use the same pixel shader. When you tell the GPU to draw shapes, you also tell it which pixel shader to use.

On almost every web page, though, different parts of the page need different pixel shaders.

Because a shader runs on all of the pixels covered by a draw call, you usually need to split your draw calls into several groups. These groups are called batches. To keep all of the cores as busy as possible, you want a small number of batches with a large number of shapes in each.
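The grouping idea can be sketched as follows. This is a deliberately simplified model: it groups shapes purely by the shader they need and ignores draw-order constraints that a real renderer has to respect. The shader and shape names are made up for the illustration.

```python
from collections import defaultdict


def build_batches(primitives):
    """Group (shader, shape) pairs so each shader needs only one draw call."""
    batches = defaultdict(list)
    for shader, shape in primitives:
        batches[shader].append(shape)
    return dict(batches)


# Hypothetical display items, each tagged with the shader it requires.
primitives = [
    ("text", "glyph A"),
    ("image", "logo"),
    ("text", "glyph B"),
    ("border", "box 1"),
    ("text", "glyph C"),
]

batches = build_batches(primitives)
print(len(primitives))  # 5 draw calls if every shape were drawn separately
print(len(batches))     # 3 draw calls after batching
print(batches["text"])  # ['glyph A', 'glyph B', 'glyph C']
```

Fewer, fuller batches mean fewer shader switches and more shapes for the cores to work on at once.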

So that is how the GPU splits up work across hundreds or thousands of cores. It is because of this extreme parallelism that we can think about rendering everything on every frame. Even with that parallelism, though, it is still a lot of work, and you have to be smart about how you set it up to get good performance. This is where WebRender comes in...


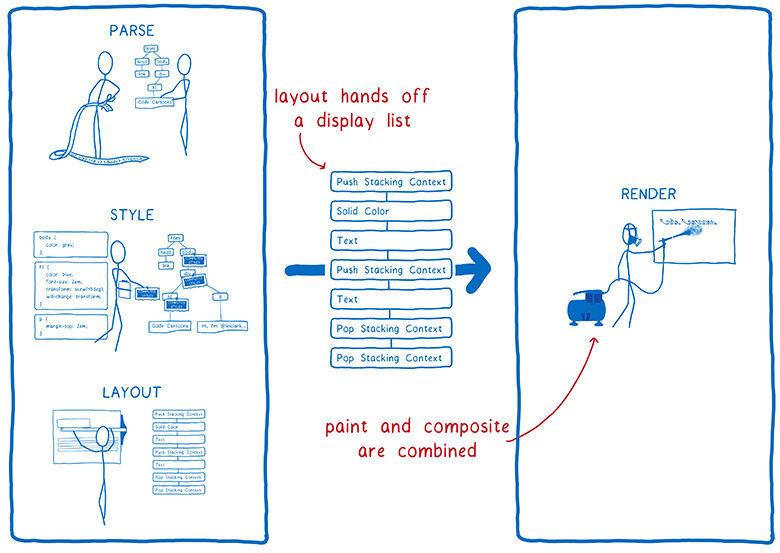

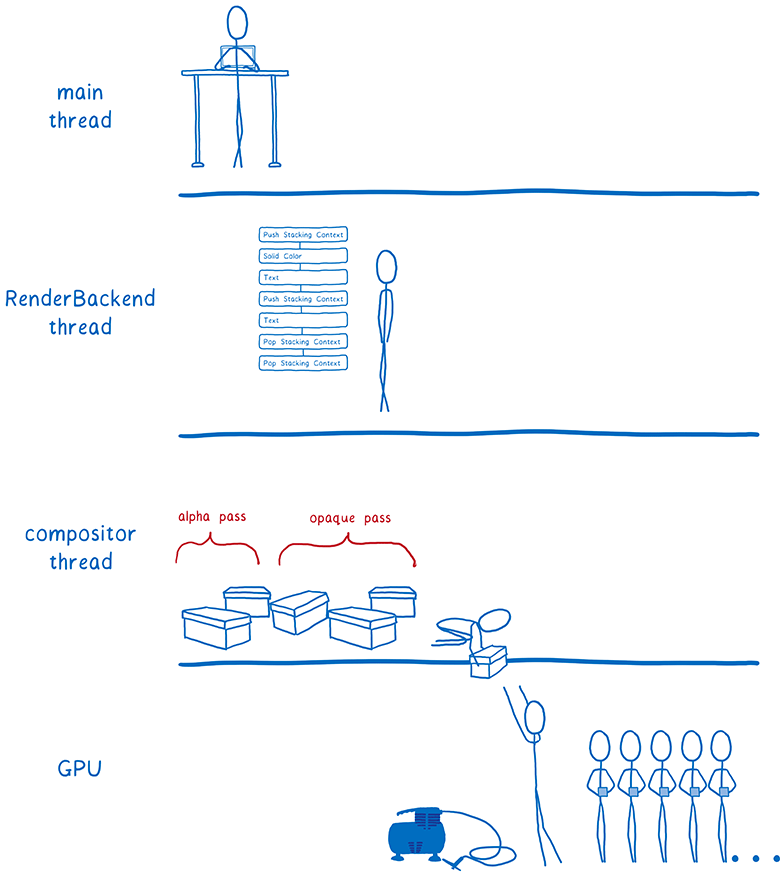

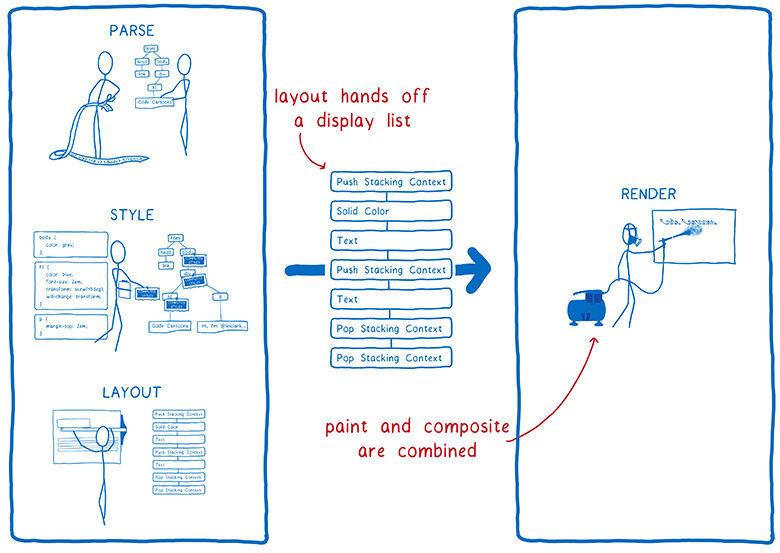

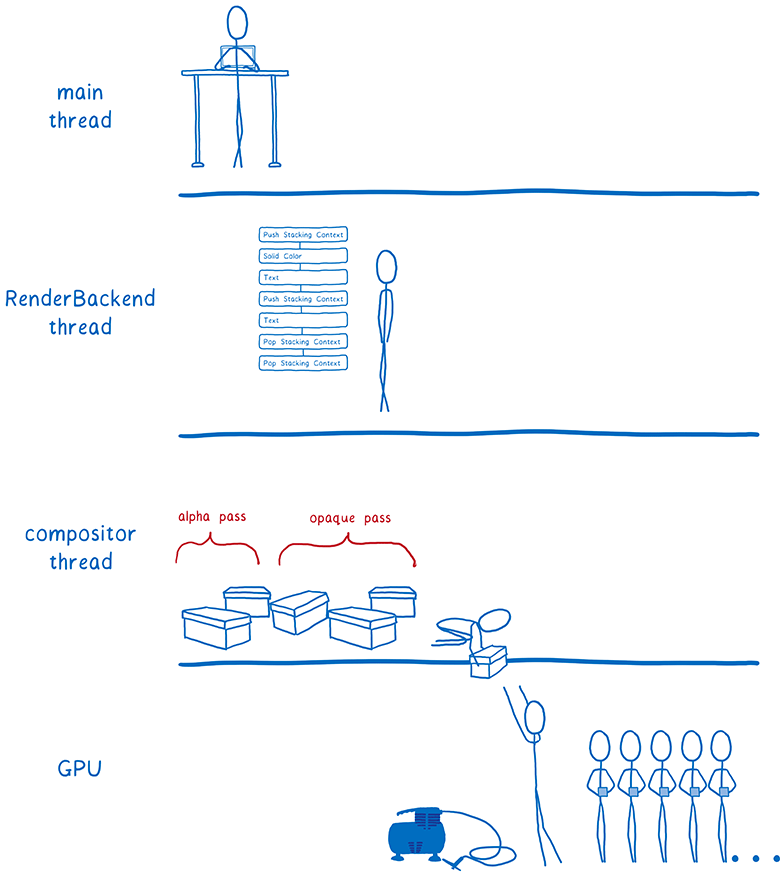

Let's go back to the steps the browser takes to render a page. Two things change here: painting and compositing are no longer separate steps, and the plan is now handed to the renderer as a display list.

A display list is a set of high-level drawing instructions. It says what needs to be drawn without being tied to any particular graphics API.

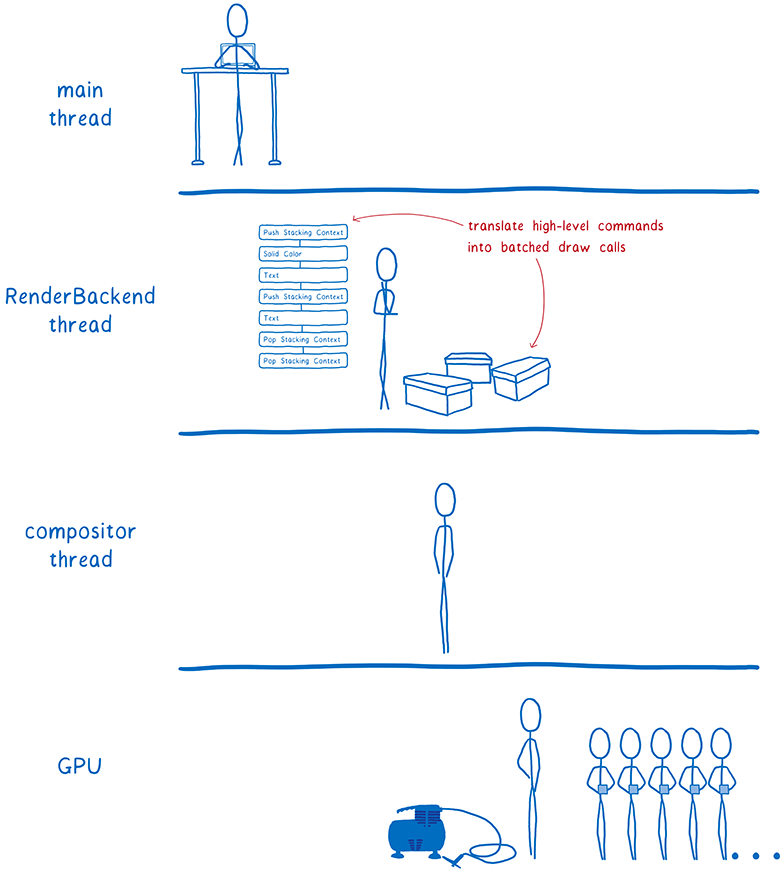

Whenever there is something new to draw, the main thread hands the display list to the RenderBackend, which is WebRender code that runs on the CPU.

The RenderBackend's job is to take this list of high-level drawing instructions and convert it into the draw calls the GPU needs, grouped into batches so that they run faster.

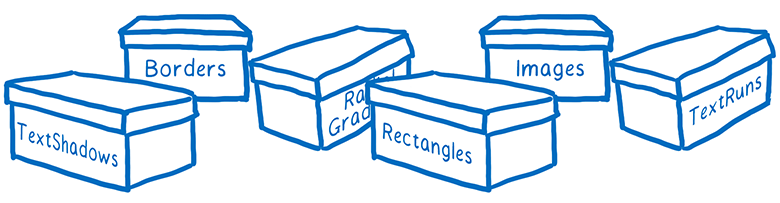

The RenderBackend then passes those batches to the compositor thread, which passes them on to the GPU.

The RenderBackend wants the draw calls it produces to run on the GPU as fast as possible. It uses several different techniques for this.


The best way to save time is not to do the work at all.

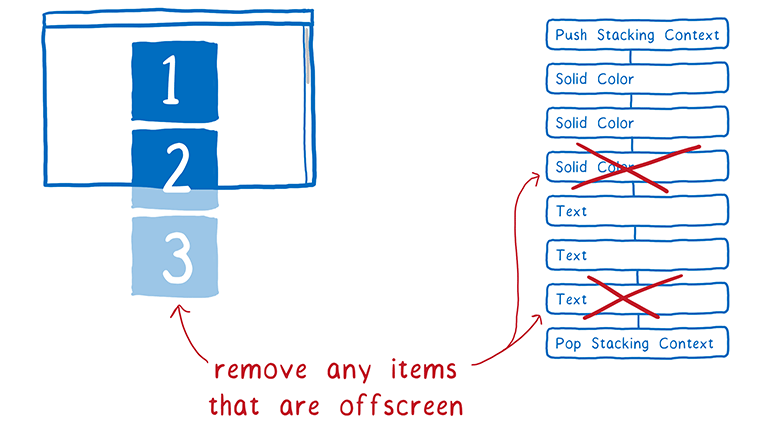

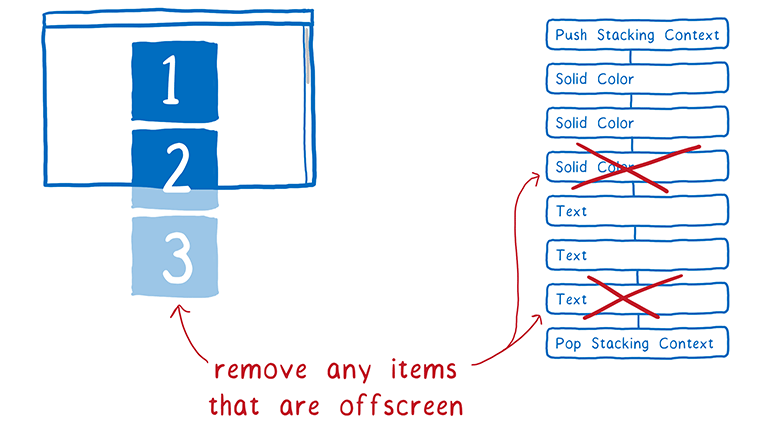

First, the RenderBackend trims the display list. It works out which display items will actually be on the screen. To do that, it looks at things like how far each scrollable box has been scrolled, which tells it where an element sits relative to the window.

If any part of a shape falls inside the window, the shape stays in the display list. If no part of it does, the shape is removed from the list. This process is called early culling.
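The culling test boils down to a rectangle intersection check. The sketch below is an illustration under stated assumptions: rectangles are `(x0, y0, x1, y1)` tuples in page coordinates with exclusive right and bottom edges, and the item names and sizes are invented.

```python
def intersects(a, b):
    """True if rectangles a and b overlap by at least one pixel."""
    return a[0] < b[2] and b[0] < a[2] and a[1] < b[3] and b[1] < a[3]


def cull(display_list, viewport):
    """Keep only the display items that are at least partly in the window."""
    return [item for item in display_list if intersects(item["rect"], viewport)]


# An 800x600 window that has been scrolled 1000 pixels down the page.
viewport = (0, 1000, 800, 1600)

display_list = [
    {"name": "header", "rect": (0, 0, 800, 100)},
    {"name": "article", "rect": (0, 100, 800, 3000)},
    {"name": "sidebar box", "rect": (600, 1500, 800, 1700)},
    {"name": "footer", "rect": (0, 3000, 800, 3100)},
]

visible = cull(display_list, viewport)
print([item["name"] for item in visible])  # ['article', 'sidebar box']
```

The sidebar box is only partly inside the window but is kept, matching the rule that any overlap is enough to stay in the list.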


Now we have a tree that contains only the shapes we will use. This tree is organized into the stacking contexts we talked about earlier.

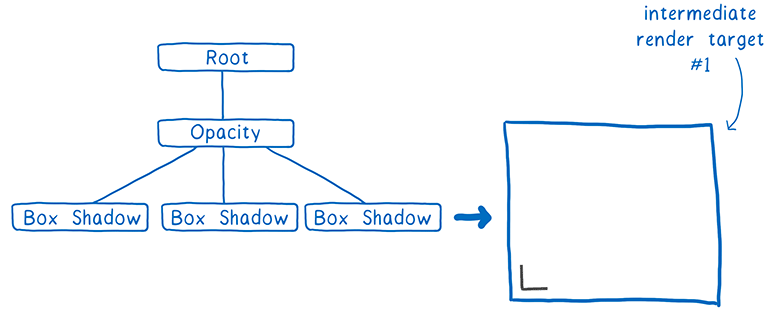

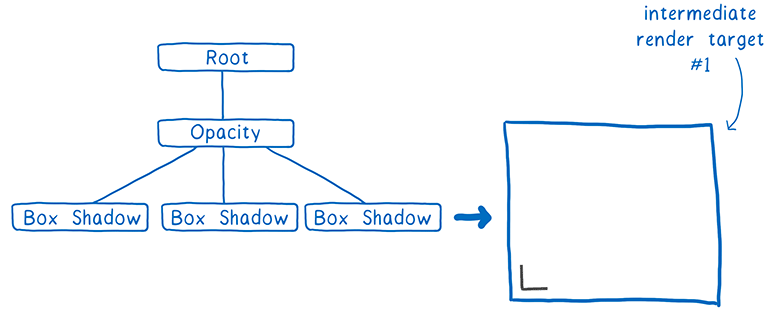

Effects like CSS filters and stacking contexts complicate things a little. For example, say you have an element with an opacity of 0.5 that has children. You might think each child becomes transparent on its own, but in reality it is the whole group that is transparent.

Because of this, you first need to render the group to a texture, with every square at full opacity. Then, when you place the texture into the parent, you can change the opacity of the texture as a whole.
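A small worked example shows why the intermediate texture matters. Consider one pixel where a red child overlaps a blue child inside a parent with opacity 0.5, drawn over a white page. The colors and the `blend` helper are assumptions for the illustration. It uses the standard source-over formula, result = alpha * source + (1 - alpha) * destination.

```python
def blend(src, dst, alpha):
    """Source-over blend of two RGB colors using a single alpha value."""
    return tuple(int(alpha * s + (1 - alpha) * d + 0.5) for s, d in zip(src, dst))


WHITE = (255, 255, 255)
RED = (255, 0, 0)
BLUE = (0, 0, 255)

# Incorrect: apply opacity 0.5 to each child separately.
# The blue child shows through the red child where they overlap.
per_child = blend(RED, blend(BLUE, WHITE, 0.5), 0.5)

# Correct: draw the children at full opacity into an intermediate texture,
# then fade the texture as a whole when placing it into the parent.
texture_pixel = blend(RED, BLUE, 1.0)  # red simply covers blue
grouped = blend(texture_pixel, WHITE, 0.5)

print(per_child)  # (192, 64, 128)
print(grouped)    # (255, 128, 128)
```

The two results differ, which is why the group has to be rendered to its own texture before the opacity is applied.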

Stacking contexts can be nested, and the parent may itself belong to another stacking context. That means it has to be drawn into yet another intermediate texture, and so on.

Allocating space for these textures is expensive. As much as possible, we would like to fit everything onto the same intermediate texture.

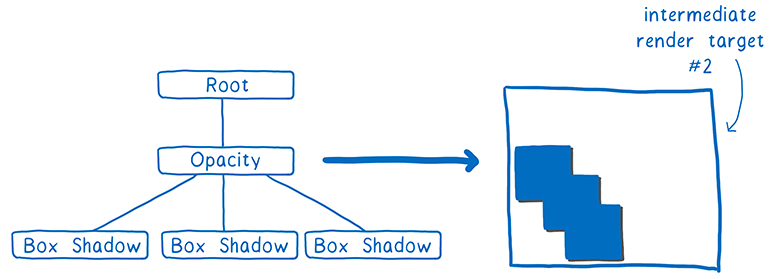

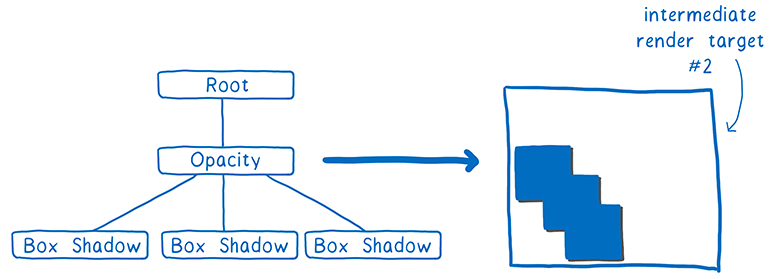

To help the GPU with this, we create a render task tree. It tells us which textures need to be created before other textures. Any textures that don't depend on others can be created in the first pass, which means they can be grouped together on the same intermediate texture.

So in the example above with the translucent squares, the first pass would paint one corner of a square. (It is actually a little more complicated than that, but this is the gist.)

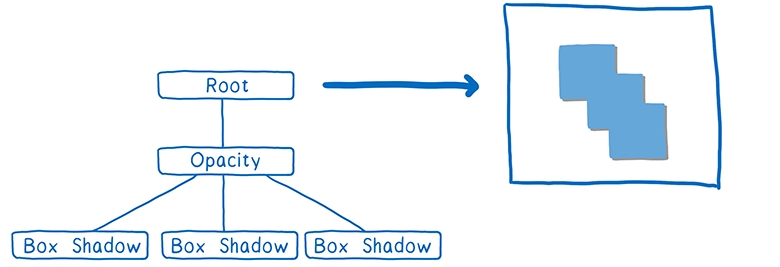

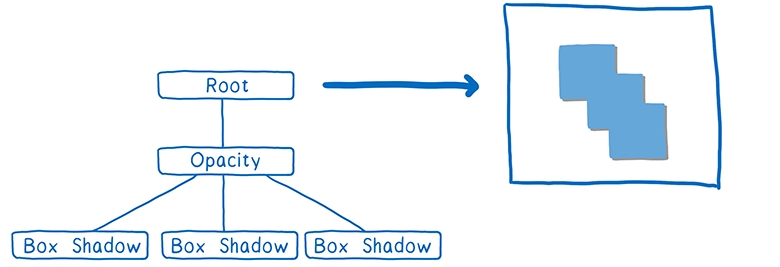

In the second pass, we can mirror that corner around the whole square and fill it in. Then we render the group of opaque squares.

Finally, all that is left is to change the opacity of the texture and place it where it belongs in the final texture that is output to the screen.

By building the render task tree, we work out the minimum number of offscreen render targets we can use. That is good because, as mentioned, allocating space for these textures is expensive.
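One way to picture the pass assignment is as a dependency calculation: a task with no dependencies goes in the first pass, and any other task goes one pass after the latest task it depends on. The sketch below is an assumption about how such a schedule could be computed, not WebRender's actual algorithm, and the task names (including the extra "box shadow blur" task) are hypothetical.

```python
def assign_passes(deps):
    """deps maps each task to the tasks whose textures it reads.

    Returns a list of passes; tasks in the same pass do not depend on
    each other and could share one intermediate texture.
    """
    memo = {}

    def pass_of(task):
        if task not in memo:
            inputs = deps[task]
            memo[task] = 0 if not inputs else 1 + max(pass_of(t) for t in inputs)
        return memo[task]

    passes = {}
    for task in deps:
        passes.setdefault(pass_of(task), []).append(task)
    return [passes[i] for i in sorted(passes)]


deps = {
    "corner": [],
    "box shadow blur": [],
    "opaque squares group": ["corner"],
    "final frame": ["opaque squares group", "box shadow blur"],
}

for number, tasks in enumerate(assign_passes(deps), start=1):
    print(number, tasks)
# 1 ['corner', 'box shadow blur']
# 2 ['opaque squares group']
# 3 ['final frame']
```

The two independent tasks land in the same pass, so in this model they could be drawn into one shared intermediate texture instead of two.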

The task tree also helps us group tasks into batches.


As we said before, we need a small number of batches with a large number of shapes in each.

Careful batching can speed rendering up a lot. You want to fit as many shapes into a batch as you can, for a couple of reasons.

First, whenever the CPU tells the GPU to do a draw call, the CPU has a lot of other work to do. It has to take care of things like setting up the GPU, uploading the shader program, and checking for various hardware bugs. All of this work adds up, and while the CPU is doing it, the GPU may be idle.

Second, changing state has a cost. For example, you need to change the shader state between batches. On a typical GPU, you have to wait until all of the cores have finished with the current shader. This is called draining the pipeline. Until the pipeline is drained, the cores that have already finished sit idle.

Because of this, you want to pack each batch as full as possible. For a typical desktop PC, you want 100 draw calls or fewer per frame, with thousands of vertices in each call. That way you get the most out of the parallelism.

Let's look at each pass in the render task tree and see which tasks are grouped into the same batch.

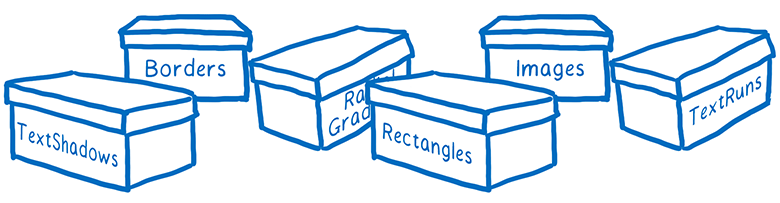

At the moment, each kind of primitive needs a different shader. For example, there is a border shader, a text shader, and an image shader.

We believe many of these shaders can be combined, which would let us create even bigger batches, though they are already batched quite well.

The tasks are almost ready to be sent to the GPU. But there is still a little more work we can eliminate.


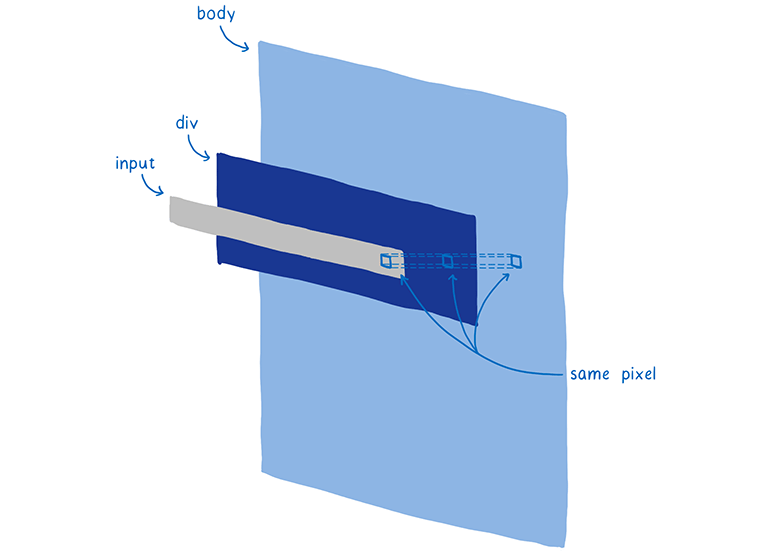

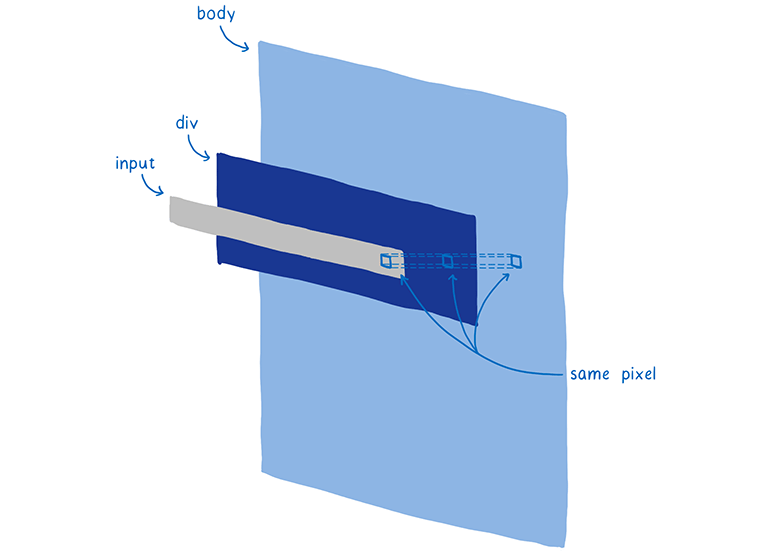

Most web pages have many shapes that overlap each other. For example, a text field sits on top of a div (with a background), which sits on top of the body (with another background).

When working out the color of a pixel, the GPU could compute the color of that pixel in every shape. But only the top layer is going to show. This is called overdraw, and it wastes GPU time.

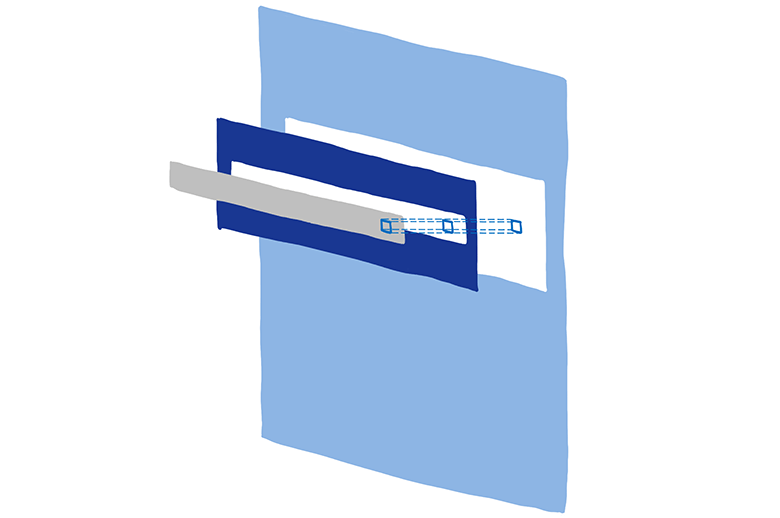

So one thing you can do is render the top shape first. When it is time to render a pixel for the next shape, you check whether that pixel already has a value. If it does, the extra work is skipped.

There is a small problem with this, though. When a shape is translucent, you need to blend the colors of the two shapes. And for it to look right, the rendering has to happen from back to front.

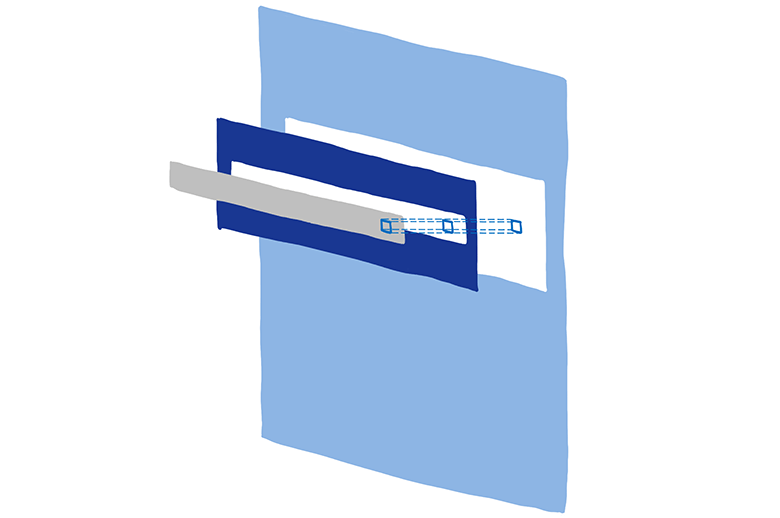

So we split the work into two passes. First comes the opaque pass. We go front to back and render all of the opaque shapes, skipping any pixels that are covered by others.

Then we do the translucent shapes. These are rendered back to front. If a translucent pixel falls on top of an opaque one, their colors are blended. If it falls behind an opaque one, it is not calculated.

This process of splitting the work into an opaque pass and an alpha pass and then skipping pixel calculations that aren't needed is called Z-culling.
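To see how much work the two passes can save, here is a toy model that counts pixel shader invocations on a single 10-pixel scanline. It is only a sketch under stated assumptions: shapes are horizontal spans with a `z` value (larger means closer to the viewer), and the shape names and sizes are invented.

```python
def shaded_with_z_culling(shapes, width):
    """Count pixel shader runs using an opaque pass and an alpha pass."""
    depth = [-1] * width  # z of the opaque shape that owns each pixel
    shaded = 0

    # Opaque pass: front to back, skipping pixels that are already covered.
    opaque = [s for s in shapes if s["opaque"]]
    for s in sorted(opaque, key=lambda s: s["z"], reverse=True):
        for x in range(s["x0"], s["x1"]):
            if depth[x] == -1:
                depth[x] = s["z"]
                shaded += 1

    # Alpha pass: back to front, skipping pixels hidden behind an opaque shape.
    translucent = [s for s in shapes if not s["opaque"]]
    for s in sorted(translucent, key=lambda s: s["z"]):
        for x in range(s["x0"], s["x1"]):
            if s["z"] > depth[x]:
                shaded += 1  # this pixel would be blended with what is below
    return shaded


def shaded_naively(shapes):
    """Count pixel shader runs if every pixel of every shape is computed."""
    return sum(s["x1"] - s["x0"] for s in shapes)


shapes = [
    {"name": "body", "x0": 0, "x1": 10, "z": 0, "opaque": True},
    {"name": "shadow", "x0": 1, "x1": 5, "z": 1, "opaque": False},
    {"name": "div", "x0": 2, "x1": 8, "z": 2, "opaque": True},
    {"name": "text field", "x0": 3, "x1": 6, "z": 3, "opaque": True},
    {"name": "tooltip", "x0": 5, "x1": 9, "z": 4, "opaque": False},
]

print(shaded_naively(shapes))             # 27
print(shaded_with_z_culling(shapes, 10))  # 15
```

In this made-up scene, the opaque pass touches each of the 10 pixels exactly once, and the translucent shadow is computed only for the one pixel where it is not hidden behind the div.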

Although this may seem like a simple optimization, it produces big wins for us. On a typical web page, it significantly reduces the number of pixels we need to touch. We are currently looking at ways to move more work into the opaque pass.

At this point, we have prepared the frame. We have done as much as we can to eliminate unnecessary work.


The GPU is now ready to be set up and to render the batches.


The CPU still does some painting work. For example, we still render the characters (called glyphs) used in blocks of text on the CPU. It is possible to do this on the GPU, but it is hard to get a pixel-for-pixel match with the glyphs that the computer renders in other applications, so people can find GPU-rendered text disorienting. We are experimenting with moving glyph rendering to the GPU as part of the Pathfinder project.

For now, these things are painted into bitmaps on the CPU. Then they are uploaded to the texture cache on the GPU. The cache is kept from frame to frame because the glyphs usually don't change.

Even though this painting stays on the CPU, we can still make it faster. For example, when painting the characters in a font, we split the different characters up across all of the cores. We do this using the same technique that Stylo uses to parallelize style computation: work stealing.


We plan to land WebRender in Firefox in 2018 as part of Quantum Render, a few releases after the initial Firefox Quantum release. This will make today's web pages run more smoothly. It will also get Firefox ready for the new generation of high-resolution 4K displays, because rendering performance becomes more critical as the number of pixels on the screen grows.

But WebRender isn't only useful for Firefox. It is also critical to the work we are doing on WebVR, where you need to render a different frame for each eye at 90 FPS at 4K resolution.

An early version of WebRender is already available in Firefox if you enable the corresponding flag manually. Integration work is still in progress, so performance is not yet as good as it will be in the final release. If you want to follow WebRender development, watch the GitHub repository or follow Firefox Nightly on Twitter, which posts weekly news about the whole Quantum Render project.

About the author: Lin Clark is an engineer in the Mozilla Developer Relations group. She works with JavaScript, WebAssembly, Rust, and Servo, and likes to draw cartoons about code.

Article based on information from habrahabr.ru

But there is another bigger part of Servo technology, which is not yet part of the Quantum Firefox, but will soon enter. WebRender is a part of project Quantum Render.

WebRender is known for its exceptional speed. But the main task — not to speed up rendering and make it more smooth.

In the development WebRender we set the goal that all applications run at 60 frames per second (FPS) or better, regardless of the display size or the size of the animation. And it worked. Pages that puff to 15 FPS in the current Chrome or Firefox, fly at 60 FPS when running WebRender.

As WebRender does it? It fundamentally changes the principle of operation of the rendering engine, making it more like a 3D engine game.

We will understand what it means. But first...

the

What does the renderer?

article on Stylo, I explained how the browser goes from the parsing of the HTML and CSS to pixels on the screen, and most browsers do it in five steps.

These five stages can be divided into two parts. The first of these is, in fact, a plan. To plan the browser parses the HTML and CSS, taking into account information like the size of the viewport to find out exactly what to look for in each element — its width, height, color, etc. the End result is what is called "tree frames" (frame tree) or "tree visualization" (render tree).

In the second part — drawing and layout — kicks in the renderer. He takes the plan and turns it into pixels on the screen.

But the browser is not enough to do it only once. He has again and again to repeat the operation for the same web page. Every time the page something changes- for example, open div to switch the browser again you have to repeatedly run through all the steps.

Even if the page nothing changes — for example, you just do scrolling, or select the text browser still needs to perform rendering operations to draw new pixels on the screen.

Below the scrolling and the animation was smooth, they must be updated at 60 frames per second.

You could have previously heard that phrase — frames per second (FPS) — unsure what it means. I present them as a flip book. It's like a book with static images that you can quickly browse through so that it creates the illusion of animation.

To animation in this flipbook looked smooth, you want to see 60 pages in a second.

Pages in flipbook made out of graph paper. There are lots and lots of little squares, and each square can contain only one color.

The task of the renderer is to fill the squares in the graph paper. When they are all filled, the frame rendering is finished.

Of course, your computer does not have this graph paper. Instead, the computer includes a memory area called the personnel buffer. Each memory address in the framebuffer is like a square on the graph paper... it corresponds to a pixel on the screen. The browser fills in each cell with numbers that correspond to the values of RGBA (red, green, blue and alpha).

When the screen needs to be updated, it accesses this memory region.

Most computer displays are refreshed 60 times per second. That's why browsers are trying to give 60 frames per second. This means that the browser has all of 16.67 milliseconds for all the work: analysis of CSS styles, layout, rendering and filling of all slots in framebuffer by numbers that correspond to colors. This time interval between two frames (16,67 MS) is called the budget frame.

You may have heard how sometimes people mention dropped frames. Missed frame is when the system does not fit in the budget. Display trying to get a new frame from the frame buffer before the browser has finished work on his display. In this case, the display again shows the old version of the frame.

Missed frames can be compared to torn from the pages of a flipbook. The animation starts to freeze and twitch, because you have lost the intermediate from the previous page to the next.

So you need to have time to put all the pixels in the HR buffer before the display again to check it out. Look at how the browser has used to cope with this and how technology has changed over time. Then we can figure out how to speed up the process.

the

a brief history of the rendering and layout

note. Rendering and layout is the part where the rendering engines in the browsers most strongly differ from each other. Single-platform browsers (Safari and Edge) work a bit like a multi-platform (Firefox and Chrome).

Even the earliest browsers had performed some optimizations to speed up rendering of pages. For example, when you scroll the page the browser was trying to move already-rendered part of the page, and then draw the pixels on a vacant site.

The process of computing what has changed and then update only the changed elements, or pixels, is called invalidation.

Over time, browsers have begun using more advanced techniques is invalidated, such invalidation rectangles. This evaluates the minimum box around the changed region of the screen and then updates only the pixels within these rectangles.

Here is really greatly reduced the amount of computation if the page is changing only a small number of elements... for example, only a blinking cursor.

But that does not help much when large parts of the page change. For those cases, browsers had to come up with new techniques.

Introducing layers and compositing

Using layers can help a lot when large parts of the page change... at least in some cases.

Layers in browsers are a lot like layers in Photoshop, or like the thin transparent sheets that were once used in hand-drawn animation. Basically, you paint different elements of the page on different layers. Then you stack those layers on top of each other.

Browsers have had layers for a long time, but they were not always used to speed up rendering. At first, they were only used to make sure elements rendered correctly. They corresponded to something called stacking contexts.

For example, if you have a translucent element on the page, it has to be in its own stacking context. That means it gets its own layer, so that its color can be blended with the color of the element below it. These layers were thrown away as soon as the frame was finished. On the next frame, all of the layers had to be painted again.

But often the things on these layers did not change from frame to frame. Think of a traditional animation: the background does not change even when the characters in the foreground move. It is much more efficient to keep the background layer around and simply reuse it.

So that is what browsers did. They started retaining layers and repainting only the ones that had changed. And in some cases, layers did not change at all. They only needed to be moved: for example, when an animation moved across the screen, or when an element was scrolled.

This process of arranging layers together is called compositing. The compositor works with the following objects:

- source bitmaps: the background (including a blank box where the scrollable content should go) and the scrollable content itself;
- a destination bitmap, which is what gets shown on the screen.

First, the compositor copies the background to the destination bitmap.

Then it figures out which part of the scrollable content should be visible and copies that part on top of the destination bitmap.
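The two copy steps above can be sketched as follows. This is a toy model under stated assumptions: bitmaps are lists of rows, the content rows are as wide as the background, and the function and parameter names are hypothetical.

```python
def composite(background, content, box_top, box_height, scroll_offset):
    """Build the destination bitmap from two source bitmaps.

    background    -- rows of pixels, with a blank box for the scrollable area
    content       -- rows of pixels of the full scrollable content
    box_top       -- first destination row covered by the scroll box
    box_height    -- number of rows in the scroll box
    scroll_offset -- index of the first content row that is visible
    """
    # Step 1: copy the background into the destination bitmap.
    destination = [row[:] for row in background]
    # Step 2: copy only the visible slice of the content on top of it.
    for i in range(box_height):
        source_row = scroll_offset + i
        if 0 <= source_row < len(content):
            destination[box_top + i] = content[source_row][:]
    return destination


if __name__ == "__main__":
    background = [["bg"] * 2 for _ in range(4)]
    content = [[f"c{i}"] * 2 for i in range(6)]
    for row in composite(background, content, 1, 2, 3):
        print(row)
```

Scrolling only changes `scroll_offset`, so neither source bitmap has to be repainted.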

This reduces the amount of painting the main thread has to do. But the main thread still spends a lot of time on compositing. And there are lots of things competing for time on the main thread.

I have used this analogy before: the main thread is like a full-stack developer. It is in charge of the DOM, layout, and JavaScript. And it was also in charge of painting and compositing.

Every millisecond the main thread spends on painting and compositing is time taken away from JavaScript or layout.

But there was another piece of hardware sitting there doing almost nothing. And it was built specifically for graphics. This is the GPU, which games have used since the 90s to render frames quickly. GPUs have been getting more and more powerful ever since.

Hardware-accelerated compositing

So the browser developers started to hand the work off to the GPU.

In theory, two tasks could be handed to the GPU:

- Painting the layers.
- Compositing the layers together.

Painting can be hard to move to the GPU. So for the most part, multi-platform browsers kept painting on the CPU.

Compositing, however, is something the GPU can do very quickly, and it was easy to move over.

Some browsers took this parallelism even further by adding a compositor thread on the CPU. It became the manager of all of the compositing work happening on the GPU. This means that if the main thread is busy (for example, running JavaScript), the compositor thread can still handle work that is visible to the user, like scrolling content.

So this moves all of the compositing work off the main thread. It still leaves a lot of work there, though. Whenever a layer needs to be repainted, the main thread has to do it and then transfer the layer to the GPU.

Some browsers moved painting to another thread as well (and we are working on that in Firefox today). But it is even faster to move this last piece of the work, painting, directly to the GPU.

Hardware-accelerated painting

So browsers started moving painting to the GPU, too.

This transition is still under way. Some browsers paint on the GPU all the time, while others do so only on certain platforms (for example, only on Windows or only on mobile devices).

Painting on the GPU has several effects. It frees up the CPU to spend its time on things like JavaScript and layout. GPUs are also much faster at drawing pixels than CPUs, so the whole rendering process speeds up. And it reduces the amount of data that has to be copied from the CPU to the GPU.

But maintaining this division between painting and compositing still has a cost, even when both run on the GPU. The division also limits the kinds of optimizations you can use to make the GPU do its work faster.

This is where WebRender comes in. It fundamentally changes the way we render, removing the distinction between painting and compositing. This lets us tailor the performance of the renderer to the demands of the modern web and prepare it for the use cases that are coming.

In other words, we did not just want to make frames render faster... we wanted them to render more consistently, without jank. And even when there are lots of pixels to draw, as in 4K WebVR headsets, we still want the experience to be smooth.

Why is animation so slow in current browsers?

The optimizations above helped pages render faster in certain cases. When very little changes on a page, for example when only a cursor is blinking, the browser does the least amount of work possible.

Breaking pages up into layers increased the number of these best-case scenarios. If you can paint a few layers once and then just move them around relative to each other, the painting plus compositing architecture works well.

But layers have trade-offs. They take up a lot of memory and can sometimes make rendering slower. Browsers need to combine layers where it makes sense... but it is hard to tell exactly where it makes sense and where it does not.

So if a page has lots of different things moving, you end up with too many layers. Those layers fill up memory and take too long to transfer to the compositor.

Other times, you end up with one layer where there should be several. That single layer is continually repainted and transferred to the compositor, which then composites it without changing anything.

That means the rendering effort is doubled: every pixel is touched twice for no benefit. It would have been faster to render the page directly and skip the compositing step.

And there are lots of cases where layers simply do not help. For example, if you animate a background, the whole layer has to be repainted anyway. Layers only help with a small number of CSS properties.

Even if most of your frames are best-case scenarios, that is, they take up only a small part of the frame budget, motion can still be choppy. For the eye to perceive jank, only a couple of frames need to fall into a worst-case scenario.

These scenarios are called performance cliffs. Your app seems to be running fine until it hits one of these worst cases (like an animated background), and the frame rate suddenly drops off the edge.

But you can get rid of these cliffs.

How do we do that? We follow the lead of 3D game engines.

Using the GPU like a game engine

What if we stopped trying to guess which layers we need? What if we removed this intermediate step between painting and compositing and just went back to painting every pixel on every frame?

This may sound like a ridiculous idea, but there is precedent for it. Modern video games repaint every pixel, and they hold 60 frames per second more reliably than browsers do. And they do it in an unexpected way... instead of creating invalidation rectangles and layers to minimize the area that has to be repainted, they just repaint the whole screen.

Wouldn't rendering a web page that way be much slower?

If we painted on the CPU, yes. But GPUs are designed for exactly this kind of work.

GPUs are built for extreme parallelism. I talked about parallelism in my last article, about Stylo. With parallelism, the machine does several things at the same time. The number of things it can do at once is limited by the number of cores it has.

CPUs usually have between 2 and 8 cores, while GPUs usually have at least a few hundred cores, and often more than 1,000.

These cores work a little differently, though. They cannot act completely independently the way CPU cores can. Instead, they usually work on something together, running the same instruction on different pieces of data.

This is exactly what you need when you are filling in pixels. Each pixel can be handled by a different core. Because the GPU can work on hundreds of pixels at a time, it fills in pixels much faster than the CPU... but only if all of those cores have work to do.

Because the cores need to work on the same thing at the same time, GPUs go through a fairly rigid set of steps, and their APIs are quite constrained. Let's look at how this works.

First, you need to tell the GPU what to draw. This means giving it shapes and telling it how to fill them in.

To do this, you break your drawing down into simple shapes (usually triangles). These shapes are in 3D space, so some shapes can be behind others. Then you take the vertices of all of those triangles and put their x, y, z coordinates into an array.

Then you issue a draw call: a command that tells the GPU to draw those shapes.
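As a rough illustration of that preparation step, the sketch below splits rectangles into two triangles each and flattens the vertices into a single coordinate array. The function names are hypothetical, and the final draw call is only described in a comment, since issuing one requires a real graphics API.

```python
def rect_to_triangles(x, y, w, h, z):
    """Split an axis-aligned rectangle into two triangles (six vertices)."""
    top_left = (x, y, z)
    top_right = (x + w, y, z)
    bottom_left = (x, y + h, z)
    bottom_right = (x + w, y + h, z)
    return [
        top_left, top_right, bottom_left,       # first triangle
        top_right, bottom_right, bottom_left,   # second triangle
    ]


def build_vertex_array(rects):
    """Flatten all triangle vertices into one [x, y, z, x, y, z, ...] list."""
    flat = []
    for rect in rects:
        for vertex in rect_to_triangles(*rect):
            flat.extend(vertex)
    return flat


if __name__ == "__main__":
    # Two page elements; the second one has a larger z value.
    vertices = build_vertex_array([(0, 0, 800, 600, 0.0), (100, 100, 200, 50, 1.0)])
    # A real renderer would now upload this array and issue one draw call.
    print(len(vertices) // 3, "vertices ready for a single draw call")
```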

From there, the GPU takes over. All of the cores work on the same thing at the same time. They will:

- Figure out where all of the corners of the shapes are. This is called vertex shading.
- Figure out the lines that connect those corners. From this, you can tell which pixels are covered by each shape. This is called rasterization.
- Once we know which pixels belong to each shape, go through every pixel and assign it a color. This is called pixel shading.
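The last two steps can be imitated on the CPU in a few lines. This is a sequential toy model, assuming the vertices are already in screen coordinates (so vertex shading is skipped) and using hypothetical function names; a GPU would run the per-pixel work in parallel across its cores.

```python
def edge(a, b, p):
    """Signed area test: which side of the line a->b the point p lies on."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])


def rasterize(triangle, width, height):
    """Return the (x, y) pixels whose centers fall inside the triangle."""
    a, b, c = triangle
    covered = []
    for y in range(height):
        for x in range(width):
            p = (x + 0.5, y + 0.5)  # sample at the pixel center
            w0, w1, w2 = edge(a, b, p), edge(b, c, p), edge(c, a, p)
            inside = (w0 >= 0 and w1 >= 0 and w2 >= 0) or \
                     (w0 <= 0 and w1 <= 0 and w2 <= 0)
            if inside:
                covered.append((x, y))
    return covered


def draw(triangle, width, height, pixel_shader):
    """Rasterize, then call the pixel shader once per covered pixel."""
    framebuffer = [[None] * width for _ in range(height)]
    for x, y in rasterize(triangle, width, height):
        framebuffer[y][x] = pixel_shader(x, y)
    return framebuffer


if __name__ == "__main__":
    solid_red = lambda x, y: (255, 0, 0)  # simplest possible pixel shader
    for row in draw([(0, 0), (4, 0), (0, 4)], 4, 4, solid_red):
        print("".join("#" if px else "." for px in row))
```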

This last step can be done in different ways. To tell the GPU how to do it, you give it a special program called a pixel shader. Pixel shading is one of the few parts of the GPU that you can program.

Some pixel shaders are very simple. For example, if the whole shape is a single color, the shader just needs to return that color for each pixel in the shape.

Other shaders are more complex, for example for a background image. Here you have to figure out which part of the image corresponds to each pixel. You can do this the same way an artist scales an image up or down... put a grid on top of the image with a square for each pixel. Then take samples of the colors inside each square and work out the final pixel color. This is called texture mapping, because the image (called a texture) is mapped onto the pixels.
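The grid idea can be sketched with a simple box filter: each output pixel averages the texture samples that fall inside its grid square. This is only one possible sampling scheme, chosen here for illustration with a hypothetical function name and grayscale values; real GPUs offer several filtering modes.

```python
def sample_texture(texture, out_w, out_h):
    """Scale a grayscale texture to out_w x out_h by averaging grid squares.

    texture is a list of rows of numbers. When the output is larger than
    the texture, each square contains a single sample, which is repeated.
    """
    tex_h, tex_w = len(texture), len(texture[0])
    result = []
    for oy in range(out_h):
        y0 = oy * tex_h // out_h
        y1 = max(y0 + 1, (oy + 1) * tex_h // out_h)
        row = []
        for ox in range(out_w):
            x0 = ox * tex_w // out_w
            x1 = max(x0 + 1, (ox + 1) * tex_w // out_w)
            # Collect every texture sample inside this pixel's grid square.
            samples = [texture[y][x] for y in range(y0, y1) for x in range(x0, x1)]
            row.append(sum(samples) / len(samples))
        result.append(row)
    return result


if __name__ == "__main__":
    image = [[0, 10, 20, 30]]
    print(sample_texture(image, 2, 1))  # shrink four texels to two pixels
```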

The GPU calls the pixel shader for every pixel. Different cores work on different pixels in parallel, but they all need to use the same pixel shader. When you tell the GPU to draw your shapes, you also tell it which pixel shader to use.

On almost any web page, different parts of the page need different pixel shaders.

Because the shader applies to all of the shapes in a draw call, you usually have to split your draw calls into several groups. These are called batches. To keep all of the cores as busy as possible, you want to create a small number of batches with lots of shapes in each of them.

So that is how the GPU splits work across hundreds or thousands of cores. It is only because of this extreme parallelism that we can consider rendering everything on every frame. Even with that parallelism, though, it is still a lot of work. You need to be smart about how you set the work up to get decent performance. This is where WebRender comes in...

How WebRender works with the GPU

Let's go back to the steps the browser takes to render the page. Two things change here.

- There is no longer a distinction between painting and compositing... both are part of the same step. The GPU does them at the same time, based on the graphics API commands it receives.
- Layout now gives us a different data structure to render. Before, it was something called a frame tree (or render tree in Chrome). Now, it passes along a display list.

The display list is a set of high-level drawing instructions. It says what needs to be drawn without being specific to any particular graphics API.

Whenever there is something new to draw, the main thread gives the display list to the RenderBackend, which is WebRender code that runs on the CPU.

The RenderBackend's job is to take this list of high-level drawing instructions and convert it into the draw calls the GPU needs, which are batched together so that they run faster.

The RenderBackend then passes those batches to the compositor thread, which passes them on to the GPU.

The RenderBackend wants the draw calls it hands over to run on the GPU as fast as possible. It uses a few different techniques for this.

Removing unnecessary shapes from the list (early culling)

The best way to save time is to not do the work at all.

First, the RenderBackend cuts down the display list. It figures out which items in the list will actually be on the screen. To do this, it looks at things like how far each scroll box has been scrolled.

If any part of a shape falls inside the window, the shape stays in the display list. If no part of it would be visible, it is removed from the list. This process is called early culling.
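A minimal sketch of early culling, assuming a single vertical scroll offset and axis-aligned items. The `DisplayItem` type and the `cull` function are hypothetical and are not WebRender's actual data structures.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DisplayItem:
    name: str
    x: float
    y: float  # position in page coordinates, not screen coordinates
    w: float
    h: float


def cull(display_list, viewport_w, viewport_h, scroll_y):
    """Keep only the items that overlap the visible window at all."""
    top, bottom = scroll_y, scroll_y + viewport_h
    return [
        item for item in display_list
        if item.y < bottom and item.y + item.h > top     # vertical overlap
        and item.x < viewport_w and item.x + item.w > 0  # horizontal overlap
    ]


if __name__ == "__main__":
    page = [
        DisplayItem("header", 0, 0, 800, 100),
        DisplayItem("article", 0, 100, 800, 2000),
        DisplayItem("footer", 0, 2100, 800, 100),
    ]
    print([item.name for item in cull(page, 800, 600, scroll_y=500)])
```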

Minimizing the number of intermediate textures (the render task tree)

Now we have a tree that contains only the shapes we will use. This tree is organized into the stacking contexts we talked about earlier.

Effects like CSS filters and stacking contexts make things a little complicated. For example, say you have an element with an opacity of 0.5, and it has children. You might think that each child is transparent... but it is actually the whole group that is transparent.

Because of this, you need to render the group to a texture first, with each box at full opacity. Then, when you place it in the parent, you can change the opacity of the whole texture.

Stacking contexts can be nested, and the parent may itself belong to another stacking context. That means it has to be rendered into yet another intermediate texture, and so on.

Allocating space for these textures is expensive. As much as possible, we want to fit things into the same intermediate texture.

To help the GPU do this, we create a render task tree. It tells us which textures need to be created before other textures. Any textures that do not depend on others can be created in the first pass, which means they can be grouped together in the same intermediate texture.

So in the example above with the translucent boxes, the first pass would paint one corner of a box. (It is actually slightly more complicated than this, but that is the gist.)

The second pass can duplicate that corner around the whole box and paint it. Then we render the group of boxes at full opacity.

Finally, all that is left is to change the opacity of the texture and place it where it belongs in the final texture that will be output to the screen.

By building this render task tree, we find the minimum number of offscreen render targets we can use. That is good because, as mentioned, allocating space for these textures is expensive.
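One way to picture the pass assignment is as a depth calculation over the task tree: a task with no dependencies goes in the first pass, and every other task goes one pass after its latest dependency. The sketch below is an illustration under that assumption, with hypothetical task names, and is not WebRender's actual scheduler.

```python
def assign_passes(dependencies):
    """Group render tasks into passes.

    dependencies maps each task name to the list of tasks whose output
    textures it reads. The graph is assumed to contain no cycles.
    Returns a list of passes; tasks in one pass are independent of each
    other, so they could share an intermediate texture.
    """
    pass_of = {}

    def visit(task):
        if task not in pass_of:
            deps = dependencies.get(task, [])
            # Leaves get pass 0; others run after their deepest dependency.
            pass_of[task] = 1 + max((visit(d) for d in deps), default=-1)
        return pass_of[task]

    for task in dependencies:
        visit(task)

    passes = {}
    for task, index in pass_of.items():
        passes.setdefault(index, []).append(task)
    return [sorted(passes[i]) for i in sorted(passes)]


if __name__ == "__main__":
    tree = {
        "screen": ["group"],
        "group": ["box_a", "box_b"],
        "box_a": [],
        "box_b": [],
    }
    for number, tasks in enumerate(assign_passes(tree)):
        print("pass", number, tasks)
```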

The task tree also helps us batch things together.

Grouping draw calls (batching)

As we said before, we want to create a small number of batches with lots of shapes in each of them.

Paying attention to how batches are built can really speed up rendering. You want to fit as many shapes into each batch as you can. There are a couple of reasons for this.

First, whenever the CPU tells the GPU to do a draw call, the CPU has a lot of work to do. It has to take care of things like setting up the GPU, uploading the shader program, and checking for various hardware bugs. This work adds up, and while the CPU is doing it, the GPU may sit idle.

Second, there is a cost to changing state. For example, you need to change the shader state between batches. On a typical GPU, you have to wait until all of the cores have finished with the current shader. This is called draining the pipeline. Until the pipeline is drained, the other cores sit idle.

Because of this, you want to pack each batch as full as possible. On a typical desktop PC, you want to have 100 draw calls or fewer per frame, and you want each call to contain thousands of vertices. That way, you get the most out of the parallelism.

We look at each pass in the render task tree and figure out which tasks can be grouped together into one batch.

At the moment, each type of primitive needs a different shader. For example, there is a border shader, a text shader, and an image shader.

We believe many of these shaders can be combined, which would let us build even bigger batches, although things are already grouped fairly well.
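The effect of grouping by shader can be shown with a small sketch. It assumes that the primitives within one pass may be reordered freely, which is a simplification, and the shader names and functions are hypothetical.

```python
from itertools import groupby
from operator import itemgetter


def count_shader_switches(primitives):
    """Draw calls needed if primitives are drawn one after another in order."""
    switches, current = 0, None
    for shader, _vertex_count in primitives:
        if shader != current:
            switches += 1
            current = shader
    return switches


def build_batches(primitives):
    """Group primitives by shader; one entry (shader, total vertices) per group.

    Assumption: drawing order within the pass does not matter.
    """
    ordered = sorted(primitives, key=itemgetter(0))
    return [
        (shader, sum(count for _, count in group))
        for shader, group in groupby(ordered, key=itemgetter(0))
    ]


if __name__ == "__main__":
    pass_primitives = [
        ("text", 6), ("image", 6), ("text", 6), ("border", 24), ("image", 6),
    ]
    print(count_shader_switches(pass_primitives), "draw calls without grouping")
    print(build_batches(pass_primitives))
```

Fewer, fuller groups mean fewer shader changes and therefore fewer pipeline drains.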

At this point the work is almost ready to be sent to the GPU. But there is a little more work we can eliminate.

Reducing pixel shading with opaque and alpha passes (Z-culling)

Most web pages have lots of shapes overlapping each other. For example, a text field sits on top of a div (with a background), which sits on top of the body (with another background).

When it works out the color of a pixel, the GPU could compute the color of that pixel in every shape. But only the top layer is going to show. This is called overdraw, and it wastes GPU time.

So one thing you can do is render the top shape first. When it is time to shade the same pixel for the next shape, we check whether that pixel already has a value. If it does, the extra work is skipped.

There is a small problem with this, though. If a shape is translucent, you need to blend the colors of the two shapes. And for the result to look right, the rendering has to go from the bottom up.

So we split the work into two passes. First comes the opaque pass. We render all of the opaque shapes from top to bottom, and skip any pixels that are hidden behind others.

Then we move on to the translucent shapes. These are rendered from the bottom up. If a translucent pixel falls on top of an opaque one, their colors are blended. If it falls behind an opaque one, it is not computed.

This process of splitting the work into an opaque pass and an alpha pass, and then skipping the pixel calculations that are not needed, is called Z-culling.
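The two passes can be modeled on a single row of pixels. This is a CPU sketch with hypothetical names, where a shape's position in the list stands in for its depth; a GPU would use its depth buffer for the same test.

```python
def render_two_pass(shapes, num_pixels, background=(1.0, 1.0, 1.0)):
    """Render shapes given bottom to top as (pixels, rgb, alpha) tuples.

    Returns the final colors and the number of pixel-shader invocations.
    """
    color = [background] * num_pixels
    depth = [-1] * num_pixels  # index of the opaque shape that owns each pixel
    shaded = 0

    # Opaque pass: top to bottom, so the first write to a pixel wins.
    for z in range(len(shapes) - 1, -1, -1):
        pixels, rgb, alpha = shapes[z]
        if alpha < 1.0:
            continue
        for p in pixels:
            if depth[p] == -1:
                color[p], depth[p] = rgb, z
                shaded += 1

    # Alpha pass: bottom to top, blending only what is in front of opaque content.
    for z, (pixels, rgb, alpha) in enumerate(shapes):
        if alpha >= 1.0:
            continue
        for p in pixels:
            if z > depth[p]:
                color[p] = tuple(alpha * s + (1 - alpha) * d
                                 for s, d in zip(rgb, color[p]))
                shaded += 1
    return color, shaded


if __name__ == "__main__":
    shapes = [
        (range(0, 4), (0.0, 0.0, 0.0), 1.0),  # body: opaque black
        ([1], (0.0, 0.0, 1.0), 0.5),          # translucent blue, hidden below
        ([1, 2], (1.0, 0.0, 0.0), 1.0),       # div: opaque red
        ([2, 3], (1.0, 1.0, 1.0), 0.5),       # translucent white overlay
    ]
    colors, shaded = render_two_pass(shapes, 4)
    naive = sum(len(pixels) for pixels, _, _ in shapes)
    print(shaded, "pixels shaded instead of", naive)
```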

While this may seem like a simple optimization, it has produced big wins for us. On a typical web page, it vastly reduces the number of pixels we need to touch. We are currently looking at ways to move more work into the opaque pass.

At this point, we have prepared the frame. We have done as much as we can to eliminate unnecessary work.

...And we're ready to render!

The GPU is now ready to be set up and to render the batches.

A caveat: not everything has moved to the GPU yet

The CPU still has to do some of the painting work. For example, we still render the characters (called glyphs) used in blocks of text on the CPU. It is possible to do this on the GPU, but it is hard to get a pixel-for-pixel match with the glyphs that the computer renders in other applications. So people can find GPU-rendered text disorienting. We are experimenting with moving glyph rendering to the GPU as part of the Pathfinder project.

For now, these things are painted into bitmaps on the CPU. Then they are uploaded to the texture cache on the GPU. This cache is kept from frame to frame, because its contents usually do not change.
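The benefit of keeping that cache between frames can be illustrated with a small sketch. The `GlyphCache` class and its cache key are assumptions made for this example, and a plain dictionary stands in for the GPU texture cache.

```python
class GlyphCache:
    """Remember rasterized glyph bitmaps so each one is painted only once."""

    def __init__(self, rasterize):
        self._rasterize = rasterize  # CPU function: (font, char, size) -> bitmap
        self._bitmaps = {}           # stand-in for the texture cache on the GPU
        self.misses = 0

    def get(self, font, char, size):
        key = (font, char, size)
        if key not in self._bitmaps:
            # Cache miss: paint on the CPU, then keep the result for later frames.
            self.misses += 1
            self._bitmaps[key] = self._rasterize(font, char, size)
        return self._bitmaps[key]


if __name__ == "__main__":
    cache = GlyphCache(lambda font, char, size: f"{font}:{char}@{size}")
    for _frame in range(3):
        for char in "hello":
            cache.get("serif", char, 16)
    print(cache.misses, "glyphs rasterized for 15 lookups")
```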

Even though this painting stays on the CPU, there is still room to make it faster. For example, when we paint the characters of a font, we split the different characters up across all of the cores. We do this with the same technique Stylo uses to parallelize style computation... work stealing.

The future of WebRender

We plan to land WebRender in Firefox in 2018 as part of Quantum Render, a few releases after the initial Firefox Quantum release. After that, existing web pages will run more smoothly. It also gets Firefox ready for the new generation of high-resolution 4K displays, because rendering performance becomes more critical as the number of pixels on the screen grows.

But WebRender is not only useful for Firefox. It is also essential to our work on WebVR, where you need to render a different frame for each eye at 90 FPS at 4K resolution.

An early version of WebRender is already available in Firefox if you turn on the corresponding flag manually. Integration work is still in progress, so performance is not yet as good as it will be in the final release. If you want to follow WebRender development, watch the GitHub repository, or follow Firefox Nightly on Twitter for weekly updates on the whole Quantum Render project.

About the author: Lin Clark is an engineer in the Mozilla Developer Relations group. She works with JavaScript, WebAssembly, Rust, and Servo, and also likes to draw pictures about code.
