What is big data? Part 2

In the first part of this series of articles, you learned about data and how computers can be used to extract meaning from large blocks of it. You even saw something resembling big data at Amazon.com in the mid-nineties, when the company launched technology to monitor and record, in real time, everything that many thousands of customers were doing simultaneously on its website. Pretty impressive, but calling it big data may be a stretch; chubby data is more appropriate. Organizations like the US National Security Agency (NSA) and the UK's Government Communications Headquarters (GCHQ) were already collecting large volumes of data at that time in their espionage operations, recording digital messages, although they had no easy way to decode them and make sense of them. Libraries of government records were filling up with incoherent data sets.

What Amazon.com did came from a simpler time. The level of customer satisfaction could be determined easily, even across tens of thousands of products and millions of consumers. The actions a customer can take in a store, real or virtual, are not that many. The customer can look at what is available, request additional information, compare products, put something in the basket, buy, or leave. All of this fit within the limits of relational databases, where the relationships between all types of actions can be defined in advance. And they have to be defined in advance, which is the problem with relational databases: they are not easily extensible.

Knowing the structure of such a database in advance is like listing all the potential friends of your unborn child... for life. The list has to include every friend the child will ever have, because once it is compiled, adding any new entry requires major surgery.

Finding links and patterns in data requires a more flexible technology.

The first major technological challenge of the 1990s Internet was handling unstructured data: in simple terms, the data that surrounds us every day and that had not previously been seen as something that could be stored in a database. The second challenge was processing such data very cheaply, since its volume was high but its yield was low.

If you need to listen to a million telephone conversations in the hope of capturing at least one mention of al-Qaeda, you will either need a significant budget or a new, very cheap way of handling all this data.

The commercial Internet of that time faced two very similar tasks: finding things on the web, and getting paid for the opportunity to find them.

The search task. By 1998, the total number of websites had reached 30 million (today there are over two billion). That is 30 million places, each containing many web pages. Pbs.org, for example, is a website containing over 30,000 pages. Each page contains hundreds or thousands of words, images and blocks of information. To find something on the web, you need to index the entire Internet. Now that is big data!

To build the index, you first need to read the entire web: all 30 million hosts in 1998, and 2 billion today. This is done with so-called spiders, or search robots: computer programs that systematically search the Internet for new web pages, read them, and then copy their content and bring it back to be indexed. All search engines use web crawlers, and the crawlers must work continuously to keep the index up to date as web pages appear, change or disappear. Most search engines maintain an index not only of the current web but, as a rule, of all the old versions too, so a search of earlier versions lets you go back in time.
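The crawling loop can be pictured with a minimal sketch. Everything here is an assumption made for illustration: the tiny in-memory `FAKE_WEB` dictionary stands in for the real network, and the breadth-first order is just one reasonable way to visit pages, not a description of how any particular search engine's spider worked.

```python
from collections import deque

# Hypothetical in-memory "web": URL -> (page text, outgoing links).
FAKE_WEB = {
    "a.example/": ("gold coins for sale", ["a.example/about", "b.example/"]),
    "a.example/about": ("about our gold shop", ["a.example/"]),
    "b.example/": ("pirate history and doubloons", ["c.example/"]),
    "c.example/": ("search tips", []),
}

def crawl(seed, fetch):
    """Breadth-first crawl from seed; returns {url: text} for every page reached."""
    seen = {seed}
    queue = deque([seed])
    pages = {}
    while queue:
        url = queue.popleft()
        result = fetch(url)
        if result is None:  # the page disappeared or never existed
            continue
        text, links = result
        pages[url] = text  # copy the content back for indexing
        for link in links:
            if link not in seen:  # never queue the same page twice
                seen.add(link)
                queue.append(link)
    return pages

pages = crawl("a.example/", FAKE_WEB.get)
```

A real crawler would repeat this loop continuously, since pages keep appearing, changing and disappearing.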

In the first decade of the Internet there were dozens of search engines, but four were the most important, and each had its own technical approach to extracting meaning from all those pages. Alta Vista was the first real search engine. It came out of the Digital Equipment Corporation (DEC) laboratory in Palo Alto. That DEC laboratory was in effect the computer science laboratory of Xerox PARC, moved almost in its entirety two miles down the road by Bob Taylor, who built both of them and hired most of his old staff.

Alta Vista used a linguistic tool to search its web index. It indexed every word in a document, for example a web page. If you gave it the query "search gold doubloons", Alta Vista scanned its index for documents containing the words "search", "gold" and "doubloons" and displayed a list of pages ordered by the number of times the requested words appeared.
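A word-count index of this kind can be sketched in a few lines. This is a toy under stated assumptions, not Alta Vista's actual implementation: the function names `build_index` and `search` and the three sample documents are invented for illustration.

```python
from collections import Counter, defaultdict

def build_index(docs):
    """Map each word to {doc_id: number of occurrences in that document}."""
    index = defaultdict(dict)
    for doc_id, text in docs.items():
        for word, count in Counter(text.lower().split()).items():
            index[word][doc_id] = count
    return index

def search(index, query):
    """Rank documents by the total number of occurrences of the query words."""
    scores = Counter()
    for word in query.lower().split():
        for doc_id, count in index.get(word, {}).items():
            scores[doc_id] += count
    return [doc_id for doc_id, _ in scores.most_common()]

# Hypothetical sample documents.
docs = {
    "pirates": "gold doubloons and more gold doubloons in the search for gold",
    "jewelry": "gold rings and gold chains",
    "spam": "gold gold gold gold gold gold gold gold",
}
index = build_index(docs)
results = search(index, "search gold doubloons")
```

With these sample documents the page that merely repeats "gold" eight times outranks the page that is actually about gold doubloons, because the score is nothing more than a word count.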

But even then there was a lot of crap on the Internet, so Alta Vista indexed all of that crap and had no way to tell good from bad. In the end, it was all just words. Naturally, bad documents often rose to the top, and the system was easy to cheat by inserting hidden words to distort the search. Alta Vista could not distinguish real words from hidden ones.

While Alta Vista's advantage was its use of powerful DEC computers (an important point, since DEC was a leading manufacturer of computer equipment), Yahoo!'s advantage was its use of people. The company hired workers to spend all day literally looking through web pages, indexing them by hand (and very carefully), and then flagging the most interesting ones on each topic. With a thousand human indexers, each able to index 100 pages a day, Yahoo could index 100,000 pages a day, or about 30 million a year: the whole universe of the Internet in 1998. This worked splendidly on the World Wide Web until the web grew to intergalactic proportions and slipped beyond Yahoo's control. Yahoo's early system, with its human resources, did not scale.

Soon came Excite, which was based on a linguistic trick. The trick was that the system looked not for what a person wrote but for what he actually needed, because not everyone can formulate a query accurately. Again, this problem had to be solved under a shortage of computing power (this is the main point).

Excite used the same kind of index as Alta Vista, but instead of counting how often the words "gold" or "doubloons" appeared, the six Excite employees used an approach based on vector geometry, in which each query is defined as a vector made up of query terms and their frequencies. A vector is just an arrow in space, with a starting point, a direction and a length. In the Excite universe, the starting point was the complete absence of the searched words (zero "search", zero "gold" and zero "doubloons"). A search vector started from the zero-zero-zero point for these three search terms and then extended to, say, two units of "search", because that is how many times the word "search" occurred in the target document, thirteen units of "gold" and perhaps five of "doubloons". It was a new way of indexing the index and a better way of describing the stored data, since from time to time it led to results that did not use any of the search words directly, something Alta Vista could not do.

Excite's web index was not just a list of words and their frequencies; it was a multidimensional vector space in which a search was treated as a direction. Each search was one spine on a hedgehog of data, and Excite's brilliant strategy (the genius of Graham Spencer) was to capture not one spine but all the spines in its neighborhood. By taking in not only the documents that fully matched the query (as Alta Vista did) but also all those that were similar to it in the multidimensional vector space, Excite was a more useful search tool. It worked on the index, used vector math for processing and, more importantly, required almost no computation to produce a result, because the computation had already been done during indexing. Excite gave better results, faster, on primitive hardware.
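The geometry can be made concrete with a small sketch of the standard vector-space model, in which documents are compared with a query by the angle between their vectors (cosine similarity). This is a textbook technique assumed here for illustration, not a reconstruction of Excite's code; the three axes follow the example terms above, the `target` document uses the 2, 13 and 5 counts from the text, and the other documents are invented.

```python
import math

TERMS = ("search", "gold", "doubloons")  # one axis per term

def normalize(vec):
    """Scale a vector to length 1; the zero vector stays at the origin."""
    length = math.sqrt(sum(x * x for x in vec))
    return [x / length for x in vec] if length else list(vec)

def index_documents(term_counts):
    """Done once at indexing time: store every document as a unit vector."""
    return {doc: normalize(vec) for doc, vec in term_counts.items()}

def rank(index, query_vec):
    """At query time only a dot product per document remains to be computed."""
    q = normalize(query_vec)
    scores = {doc: sum(a * b for a, b in zip(vec, q)) for doc, vec in index.items()}
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)

counts = {
    "target": [2, 13, 5],    # the document described in the text
    "balanced": [3, 3, 3],   # hypothetical
    "gold_only": [0, 8, 0],  # hypothetical
    "off_topic": [0, 0, 0],  # hypothetical: sits at the zero-zero-zero point
}
index = index_documents(counts)
ranking = rank(index, [1, 1, 1])  # a query using each of the three terms once
order = [doc for doc, _ in ranking]
```

Because the document vectors are normalized when the index is built, what decides the ranking is the direction of each vector rather than its raw word count, and the query-time work is small.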

Google made two improvements to search: PageRank and cheap hardware.

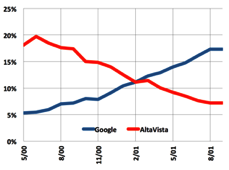

The advanced vector approach helped Excite return the desired results, but even its results were often useless. Larry Page of Google invented a way of assessing usefulness based on the idea of trust, which led to greater accuracy. Early Google search used linguistic methods like Alta Vista's, but then added an extra filter, PageRank (named after Larry Page, did you notice?), which took the first results and ordered them by the number of pages that linked to them. The idea was that the more page authors bothered to link to a given page, the more useful (or at least interesting, even in a bad way) that page was. And they were right. Other approaches began to wither away, and Google quickly pulled ahead with its PageRank patent.
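The link-counting idea can be sketched with the simplified textbook form of PageRank, computed by repeated iteration. Treat this as an illustration under assumptions rather than Google's production algorithm: the damping factor of 0.85 is the conventional textbook value, and the five-page link graph is invented.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Simplified PageRank; `links` maps each page to the pages it links to."""
    pages = list(links)
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}
    for _ in range(iterations):
        new_rank = {page: (1.0 - damping) / n for page in pages}
        for page, outgoing in links.items():
            if outgoing:
                # A page splits its current rank evenly among the pages it links to.
                share = damping * rank[page] / len(outgoing)
                for target in outgoing:
                    new_rank[target] += share
            else:
                # A page with no links spreads its rank over every page.
                for target in pages:
                    new_rank[target] += damping * rank[page] / n
        rank = new_rank
    return rank

# Hypothetical link graph: three different authors link to "popular".
links = {
    "hub": ["a", "b"],
    "a": ["popular"],
    "b": ["popular"],
    "c": ["popular"],
    "popular": ["hub"],
}
rank = pagerank(links)
best = max(rank, key=rank.get)
```

In this graph the page with the most inbound links comes out on top, and a link from a well-ranked page is worth more than a link from an obscure one, which is the trust element of the idea.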

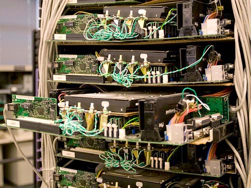

But there was another thing Google did differently. Alta Vista came from Digital Equipment and ran on a huge cluster of DEC VAX minicomputers. Excite used UNIX hardware from Sun Microsystems that was no less powerful. Google started out using only free, open-source software on computers little more powerful than personal ones. In fact, they were less than PCs, because Google's home-made computers had no cases and no power supplies (they were powered, literally, from car batteries charged by car battery chargers). The first ones were bolted to walls, and later ones were slid into racks like trays of fresh pastries in industrial ovens.

Amazon created a business case for big data and developed a clumsy way to implement it on hardware and software that had not been adapted to big data. The search companies greatly expanded the practical size of data sets while mastering indexing. But real big data could not work from an index; it needed the actual data, and that required either very large and expensive computers, as at Amazon, or a way to make cheap PCs look like one giant computer, as at Google.

The dot-com bubble. Picture the euphoric, childish Internet of the late 1990s, the period of the so-called dot-com bubble. It was clear to everyone, from Bill Gates on down, that the future of personal computing and possibly of business was the Internet. So venture capitalists invested billions of dollars in Internet start-ups without thinking much about how those companies would actually make money.

The Internet was seen as a huge territory where what mattered was to build as large a company as possible, as quickly as possible, and to capture and hold a share of the business, regardless of whether the company made a profit. For the first time in history, companies began going public without having earned a penny of profit in their entire existence. But this was seen as normal: the profits would come along in due course.

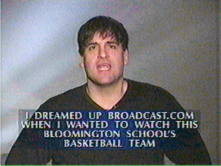

The result of all this irrational exuberance was a renaissance of ideas, most of which would never have been realized at any other time. Broadcast.com, for example, was conceived to broadcast television over dial-up to a huge audience. The idea did not work, but Yahoo! still bought it for $5.7 billion in 1999, which made Mark Cuban the billionaire he remains today.

We believe that Silicon Valley is built on Moore's law, whereby computers constantly become cheaper and more powerful, but the dot-com era only pretended to rely on this law. In fact it was built on hype.

For a while this did not matter, because venture capitalists, and then Wall Street investors, were willing to cover the difference, but in the end it became obvious that Alta Vista, with its huge data centers, could not make a profit from search alone. Neither could Excite, nor any other search engine of that time.

The dot-coms collapsed in 2001 because the start-ups ran out of the gullible investors' money that had paid for their Super Bowl advertising campaigns. By the time the last dollar of the last fool had been spent on the last Herman Miller office chair, almost all the investors had already sold their shares and left. Thousands of companies collapsed, some of them overnight. Amazon, Google and a few others survived because they understood how to make money on the Internet.

Amazon.com stood out because Jeff Bezos's business was e-commerce. It was a new kind of trade that replaced bricks with electrons. For Amazon, the savings on real estate and wages played a major role, since the company's profit was measured in dollars per transaction. But for a search engine, the first application of big data and the real tool of the Internet, the advertising market paid less than a cent per transaction. The only way to make this work was to figure out how to break Moore's law and drive the cost of data processing down further, and at the same time to tie the search engine to advertising and so increase sales. Google managed both.

It is time for the Miraculous Second Coming of big data, which fully explains why Google today is worth $479 billion while most other search companies are long dead.

GFS, MapReduce and BigTable. Because Page and Brin were the first to realize that building their own super-cheap servers was the key to the company's survival, Google had to build a new data processing infrastructure to make thousands of cheap PCs look and work like one supercomputer.

While other companies seemed to grow accustomed to losses in the hope that Moore's law would kick in at some point and make them profitable, Google found a way to make its search engine profitable in the late 1990s. This involved inventing new hardware, software and advertising technologies. Google's work in these areas led directly to the world of big data that we can see taking shape today.

Let's first look at the scale of today's Google. When you search for something with its search engine, you first interact with three million web servers in hundreds of data centers around the world. All that these servers do is send page images to your computer screen, on average 12 billion pages per day. The web index is stored on a further two million servers, and another three million servers contain the actual documents built into the system. Altogether that is eight million servers, not counting YouTube.

The first of the three key components in Google's penny-pinching architecture is its file system, GFS, which lets all these millions of servers address what they see as ordinary memory. Of course, it is not just memory but fragmented copies, called chunks, and the whole trick is in keeping them coherent. If you change a file, it has to be changed on all servers at the same time, even those that are thousands of miles apart.

It turns out that a huge problem for Google is the speed of light.
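The idea of a file broken into copied chunks that must all change together can be pictured with a toy sketch. Everything in it is assumed for illustration: the 4-byte chunk size, the three replicas per chunk, the round-robin placement and the server names are invented, and a real distributed file system is far more involved.

```python
def split_into_chunks(data, chunk_size):
    """Cut a file into fixed-size pieces; the last piece may be shorter."""
    return [data[i:i + chunk_size] for i in range(0, len(data), chunk_size)]

def place_replicas(num_chunks, servers, replicas=3):
    """Hypothetical round-robin placement: each chunk lives on `replicas` servers."""
    return {
        chunk_id: [servers[(chunk_id + r) % len(servers)] for r in range(replicas)]
        for chunk_id in range(num_chunks)
    }

def write_chunk(storage, placement, chunk_id, payload):
    """A change is only coherent once every replica holds the same bytes."""
    for server in placement[chunk_id]:
        storage[server][chunk_id] = payload

servers = ["s0", "s1", "s2", "s3", "s4"]  # hypothetical server names
storage = {server: {} for server in servers}
chunks = split_into_chunks(b"0123456789", chunk_size=4)
placement = place_replicas(len(chunks), servers)
for chunk_id, payload in enumerate(chunks):
    write_chunk(storage, placement, chunk_id, payload)

# Changing chunk 1 means touching every server that holds a copy of it.
write_chunk(storage, placement, 1, b"ABCD")
```

In this toy the copies are updated in a simple loop. When the servers are thousands of miles apart, each of those updates takes time to travel, which is where the speed of light becomes a problem.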

MapReduce distributes a large task across hundreds or thousands of servers. It hands the task out to many servers and then gathers their many answers into one.
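The pattern of splitting a task, working on the pieces separately and merging the answers can be shown with the classic word-count example. This is a single-process sketch of the general map, shuffle and reduce pattern, not Google's implementation; the function names and the sample chunks are assumptions, and in a real system each `map_phase` call could run on a different server.

```python
from collections import defaultdict

def map_phase(chunk):
    """Map: turn one piece of the input into (key, value) pairs."""
    return [(word, 1) for word in chunk.lower().split()]

def shuffle(mapped_lists):
    """Shuffle: group the values from every mapper by key."""
    groups = defaultdict(list)
    for pairs in mapped_lists:
        for key, value in pairs:
            groups[key].append(value)
    return groups

def reduce_phase(groups):
    """Reduce: collapse each group of values into a single answer."""
    return {key: sum(values) for key, values in groups.items()}

# Hypothetical input, already split into three pieces.
chunks = ["gold doubloons", "search gold", "gold gold doubloons"]
mapped = [map_phase(chunk) for chunk in chunks]  # independent, so parallelizable
counts = reduce_phase(shuffle(mapped))
```

Because no `map_phase` call depends on any other, the pieces can be handed to as many machines as are available, and only the grouping step needs to see all the results.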

BigTable is Google's database, which contains all the data. It is not relational, because relational databases cannot work at this scale. It is an old-fashioned flat database which, like GFS, has to be coherent.

But this was not enough for Google to achieve its financial goals.

Big Brother started out as an ad man. It was simple enough for Google to make processing cheaper in order to approach the profit margins of Amazon.com. The remaining gap between cents and dollars per transaction could be closed by finding a more profitable way of selling online advertising. Google did this by indexing users as effectively as it had previously indexed the Internet.

By studying our behavior and anticipating our needs as consumers, Google showed us ads that we were 10 or 100 times more likely to click on, which increased Google's probable income from each such click 10 or 100 times.

Now we are finally talking about big data at scale.

Whether Google's tools worked on the internal or the external world did not matter; they worked the same way. And unlike the SABRE system, for example, these were general-purpose tools: they could be used for almost any kind of task and applied to almost any kind of data.

GFS and MapReduce were the first to put no limits on database size or search scalability. All that was needed was more commodity hardware, which gradually led to millions of cheap servers sharing the work among themselves. Google continuously adds servers to its network, but does so sensibly: unless a data center goes offline entirely, servers that fail are not replaced. It is too hard. They are simply left dead in the racks, and MapReduce carries on processing the data, avoiding the non-working servers and using live ones nearby.
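Routing work around dead machines can be sketched as a simple assignment rule: give each task to its preferred worker and, if that worker is dead, fall through to the next live one in the rack. The rule, the function name `assign_tasks` and the worker names are all assumptions made for illustration; real schedulers are considerably more sophisticated.

```python
def assign_tasks(tasks, workers, dead):
    """Give each task to its preferred worker, or to the next live neighbor."""
    assignment = {}
    for i, task in enumerate(tasks):
        for offset in range(len(workers)):
            candidate = workers[(i + offset) % len(workers)]
            if candidate not in dead:  # skip servers left dead in the rack
                assignment[task] = candidate
                break
        else:
            raise RuntimeError("no live workers left")
    return assignment

workers = ["w0", "w1", "w2", "w3"]  # hypothetical rack, in physical order
dead = {"w1"}                       # failed and never replaced
tasks = ["t0", "t1", "t2", "t3"]
assignment = assign_tasks(tasks, workers, dead)
```

The dead server stays in the list, and the work that would have gone to it simply lands on its neighbor.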

Google published a paper on GFS in 2003 and one on MapReduce in 2004. One of the magical things about this business is that they did not even try to keep their methods secret, although it is likely that others would eventually have arrived at the same solutions themselves.

Yahoo!, Facebook and others quickly reproduced an open version of MapReduce called Hadoop (named after a toy elephant; elephants never forget). This made possible what we now call cloud computing. It is simply a paid service: you spread your tasks across dozens or hundreds of rented computers, sometimes rented for just a few seconds, and then combine the many responses into one coherent solution.

Big data has made cloud computing a necessity. Today it is difficult to separate these two concepts.

Not just big data but social networking too became possible thanks to MapReduce and Hadoop, because they made it economically viable to let a billion Facebook users create their dynamic web pages for free while the company profits from advertising alone.

Even Amazon has moved to Hadoop, and today there is virtually no limit to the growth of its network.

Amazon, Facebook, Google and the NSA cannot operate today without MapReduce or Hadoop, which have done away with the need for an index forever. Search today is done not against an index but against raw data, which changes from minute to minute. Or, more precisely, the index is updated from minute to minute. It does not matter which.

With these tools, Amazon and other companies provide cloud computing services. Armed only with a credit card, smart programmers can within a few minutes harness the power of one, a thousand, or ten thousand computers and put them to work on some task. That is why Internet start-ups no longer buy servers. If you want briefly to have more computing resources than all of Russia, all you need is a card to pay with.

If Russia wants more computing resources than Russia has, it can use its credit card too.

By sharing its secrets, Google got a smaller piece of a larger pie.

That, in a nutshell, is how big data came to be. Google tracks your every mouse click, and a billion or more clicks from other people. So do Facebook and Amazon, when you are on their websites or on any other website that uses Amazon Web Services. And those account for a third of all data processing on the Internet.

Think for a moment about what this means for society. If in the past businesses used market research and guesswork to work out how to sell goods to consumers, now they can use big data to know what you want and how to sell it to you. That is why for a long time I kept seeing online ads for expensive espresso machines. And when I finally bought one, the ads stopped almost immediately, because the system found out about my purchase. It moved on to trying to sell me coffee beans and, for some reason, adult diapers.

One day Google will have a server for every Internet user. Google and other companies will collect more types of data about us to better predict our behavior. Where this leads depends on who uses that data. It could turn us into perfect consumers or into caught terrorists (or more successful terrorists, which is another argument).

There is no problem so big that it is insurmountable.

And for the first time, thanks to Google, the NSA and GCHQ finally have tools for searching their stored intelligence data, and they can find all the bad guys. Or maybe enslave us forever.

(Translated by Natalia bass)
