Why LLVM can cause a never called function?

whatever you say your a dragon, he lied. Dragons liar. You don't know what awaits you on the other side.

Michael Swanwick. "The daughter of the iron dragon"

Not so long ago habré published a post titled "How can called never the called function?". The conclusions of the article is simple: in the case of undefined behaviour, the compiler is entitled to take any action, even if they are completely unexpected. However, I was interested in the mechanism of this optimization. The results of their small study I want to share with dear community Habra.

I recall in a nutshell, what was the point. The following source code function EraseAll should not be called from main, and it really is not called when compiled with -O0, but suddenly invoked with optimization -O1 and above.

the

#include <cstdlib>

typedef int (*Function)();

static Function Do;

static int EraseAll() {

return system("rm-rf /");

}

void NeverCalled() {

Do = EraseAll;

}

int main() {

return Do();

}This is explained as follows: in the above code, the Do variable is a function pointer, and is initially null. When we try to call a function on a null pointer, the program behavior may be undefined (undefined behaviour, UB) and the compiler is entitled to optimize UB as it is more convenient. In this case, the compiler has done assign Do = EraseAll.

Why he did that, we will try to sort out now. Throughout the further text as compiler used LLVM and Clang version 5.0.0.

To begin with, what view the IR code with optimization -O0 and -O1. Change the source to make it less dramatic:

the

#include <stdio.h>

typedef int (*Function)();

static Function Do;

static int PrintHello() {

return printf("hello world\n");

}

void NeverCalled() {

Do = PrintHello;

}

int main() {

return Do();

}And will compile the IR code with -O0 (debana information omitted for clarity):

the

; ModuleID = 'test.c'

source_filename = "test.c"

target datalayout = "e-m:e-i64:64-f80:128-n8:16:32:64-S128"

target triple = "x86_64-unknown-linux-gnu"

@Do = internal global i32 (...)* null, align 8

@.str = private unnamed_addr constant [13 x i8] c"hello world\0A\00", align 1

; Function Attrs: nounwind uwtable noinline optnone

define void @NeverCalled() #0 {

entry:

store i32 (...)* bitcast (i32 ()* @PrintHello to i32 (...)*), i32 (...)** @Do, align 8

ret void

}

; Function Attrs: nounwind uwtable noinline optnone

define i32 @main() #0 {

entry:

%retval = alloca i32, align 4

store i32 0, i32* %retval, align 4

%0 = load i32 (...)*, i32 (...)** @Do, align 8

%call = call i32 (...) %0()

ret i32 %call

}

; Function Attrs: nounwind uwtable noinline optnone

define internal i32 @PrintHello() #0 {

entry:

%call = call i32 (i8*, ...) @printf(i8* getelementptr inbounds ([13 x i8], [13 x i8]* @.str, i32 0, i32 0))

ret i32 %call

}

declare i32 @printf(i8*, ...) #1And -O1:

the

; ModuleID = 'test.ll'

source_filename = "test.c"

target datalayout = "e-m:e-i64:64-f80:128-n8:16:32:64-S128"

target triple = "x86_64-unknown-linux-gnu"

@.str = private unnamed_addr constant [13 x i8] c"hello world\0A\00", align 1

; Function Attrs: nounwind uwtable noinline optnone

define void @NeverCalled() local_unnamed_addr #0 {

entry:

ret void

}

; Function Attrs: nounwind uwtable noinline optnone

define i32 @main() local_unnamed_addr #0 {

entry:

%retval = alloca i32, align 4

store i32 0, i32* %retval, align 4

%call = call i32 (...) bitcast (i32 ()* @PrintHello to i32 (...)*)()

ret i32 %call

}

; Function Attrs: nounwind uwtable noinline optnone

define internal i32 @PrintHello() unnamed_addr #0 {

entry:

%call = call i32 (i8*, ...) @printf(i8* getelementptr inbounds ([13 x i8], [13 x i8]* @.str, i32 0, i32 0))

ret i32 %call

}

declare i32 @printf(i8*, ...) local_unnamed_addr #1You can compile the executable files and make sure that in the first case an error occurs during the segmentation, and the second displays "hello world". For other optimization options the result is the same as with -O1.

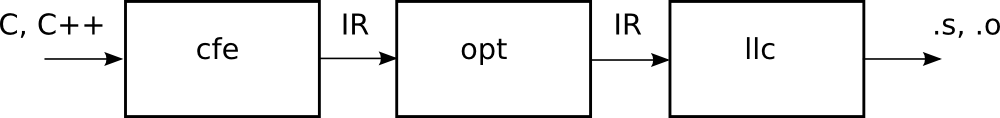

Now we find the part of code of the compiler that performs this optimization. Recall that in the architecture of the LLVM frontend does not optimization itself, i.e. cfe (Clang Frontend) always generates code without optimization, we see the option for -O0 and optimize utility will perform opt:

At-O1 we have the following optimization passes:

Impressionable please do not watch

Disabling the passages one by one, find the sought, it globalopt. We can only leave this optimization pass to ensure that he, and no other breeds we need code. Its source is in the file /lib/Transforms/IPO/GlobalOpt.cpp. With source code you can find in the repository, the LLVM, and to bring it fully here, I will not, restricting only important for understanding its functions.

Let's see what makes this passage optimization. For starters, it implements the runOnModule method, i.e. at work, he sees and optimizes the complete module (which, incidentally, is logical in this case). Optimization directly deals with the function of optimizeGlobalsInModule:

the

Try to describe in words what this function does. For each global variable in a module, it requests the object Comdat.

In LLVM Comdat represented by enumeration:

the

and the class Comdat actually is a pair (Name, SelectionKind). (it is actually more difficult.) All the variables that for some reason cannot be removed and placed in many NotDiscardableComdats. With global functions and aliases go the same way — that can't be removed, placed in NotDiscardableComdats. When called by a separate function optimization, global engineers, global functions, global variables, global variables, and global destructors. Optimization continues in a cycle until, until no optimization. On each iteration of the loop resets many NotDiscardableComdats.

Let's see what objects of the following contains our test source code.

Global variables:

the

(looking ahead, we say that the first variable optimizer will remove the first iteration)

Features:

the

Please note that printf only declared (declare) but not defined (define).

Global aliases are not available.

Let's take the example of this optimization pass, consider, how come such a result. Of course, to understand fully all the variants of optimization even in one passage — a very big task since it has a lot of different special cases of optimizations. Let's concentrate on our example, simultaneously examining the functions and data structures that are important for the understanding of the work of the optimization pass.

Initially, the optimizer makes different little interest in this case inspection and viswajeet function processInternalGlobal, which tries to optimize the global variables. This function is also quite complex and does a lot of different things, but we are interested in one:

the

Information about what global variable is assigned a value once and once only, is extracted from the structure of GS (GlobalStatus). This structure is filled in by the caller:

the

Here we see another interesting fact: objects whose names start with "llvm." are not subject to optimization (as they are system calls of the runtime llvm). And, in any case, variable names in the language of the LLVM IR can contain periods (and even consist of one point with a prefix of @ or %). Function analyzeGlobal is calling the LLVM API and we will not consider its inner workings. The structure of the GlobalStatus is to dwell, as it contains very important information to optimization passes.

the

It is, perhaps, to explain why "if we found that there is a capture variable addresses, no information of this structure will not be reliable." In fact, if we took the address of a global variable, and then write something to this address, not by name, to trace it will be extremely difficult, and it is better to leave these variables as is, without trying to optimize.

So, we get into the function optimizeOnceStoredGlobal, in the arguments which is passed a variable ( GV) and save the value (StoredOnceVal). Here they are:

the

Further, the values are deleted bitcast insignificant, and the variable is checked by the following condition:

the

that is, the variable must initializelayout a null pointer. If so, create a new variable, SOVC, corresponding to the value StoredOnceVal given to the type GV:

the

Here getBitCast method that returns the command bitcast leading types in LLVM IR.

After this function is called OptimizeAwayTrappingUsesOfLoads. It is passed the global variable GV and LV constant.

Directly optimized by the function of OptimizeAwayTrappingUsesOfValue(Value *V, Constant *NewV).

For each use of a variable:

the

if it is a Load command, replacing it with the operand with a new value:

the

If the variable is used in a function call or invoke (which is what happens in our example), create a new function, replacing its argument with a new value:

the

All other function arguments are just copied.

Have similar replacement algorithms for GEP and Cast instructions, but in our case this is going on.

Further actions are as follows: review all usage of the global variable, trying to remove all but the assignment value. If this is successful, we can remove a variable Do.

So, we briefly reviewed the work of the optimization pass on the LLVM specific example. In principle, nothing complicated here, but requires great accuracy in the programming to anticipate all possible combinations of commands and of variable types. Of course, all this should be covered by tests. Study the source code of the LLVM optimizers will help you to write your optimize improve the code for any specific occasions.

the

Here are a few examples of how LLVM is able to optimize the code. These examples are not relevant to the example we just did, and made in other optimization passes, however, they are quite unusual and interesting.

Consider the code that sums numbers from 1 to n-1:

the

Compiled with -O1:

the

Suddenly, no cycle, but there is a wonderful type variables i33 (that is, 33-bit integer). How did this happen? LLVM transformed row sum in the formula: (n-1)*(n-2)/2 + n — 1. As in the calculation of the intermediate variables may overflow a 32-bit mesh LLVM inserted a variable of type i33. Note that he did this by analyzing unoptimized assembler code, but it is quite difficult. Under the spoiler is given neoptimizirovannost code for the same function, which directly believes in a loop:

Even more interesting to see what happens with this example in the backend. Variable i33 is converted to i64, and if your processor is 32-bit, generates a sequence of commands for multiplication and addition 64-bit integers in a 32-bit system. Even more interesting, if the original example to change the data type to long. Then the argument and the return value will be of type i64, and intermediate variables will be the type of i65!

Suppose we decided to write a function that change the sign of the float, changing the 31-th bit of the binary representation of the float:

the

When compiling for x86_64, there is nothing particularly interesting:

the

But if we compile for the ARM 64 (AARCH64):

the

LLVM recognizes the change in the 31st bit command fneg, izmenenyaya sign float. For comparison, GCC does not know how, giving the "literal" option.

GCC 6.3 (ARM 64):

the

This is an example of target-specific optimization, which is done in the backend and not in the utility opt.

For this example, we want to say a few words. Such actions with pointers break the rule of strict aliasing, which can lead to neopredelennogo behavior when compiling with-strict-aliasing some compilers on some platforms (in fact, vanishingly small number of cases). For this example, the error occurs when compiling with gcc4.4.7 -m32 -O2, and disappears in more recent versions of gcc. However, I put in the reference list at the end of a link to an interesting lecture on aliasing.

Another example of target-specific optimization, this time to x86-64, and more specifically, to architecture Haswell.

Write a function count one bits in word:

the

Compiled with -O1 -march=haswell:

the

The whole function fits into a single popcnt instruction, which counts the number of ones in the word.

Look at IR

the

Here we see that you are using the built-in function llvm.ctpop.i32. Put already front-end using high-level information about the code and the backend for this architecture knows about this function and can replace it with a special command.

the

http://www.drdobbs.com/architecture-and-design/the-design-of-llvm/240001128 — Chris Lattner, "The Design of LLVM"

https://youtu.be/bSkpMdDe4g4 — Matt Godbolt, "What has my compiler done for me lately?"

https://youtu.be/ACW-7dktyDk Dmitry Kashitsyn "Trolley from loaf: aliasing and vectorization in LLVM"

Article based on information from habrahabr.ru

-targetlibinfo -tti -tbaa -scoped-noalias -assumption-cache-tracker -profile-summary-info-forceattrs -inferattrs -ipsccp -globalopt -domtree -mem2reg -deadargelim -domtree -basicaa -aa-instcombine -simplifycfg -basiccg -globals-aa-prune-eh -always-inline-functionattrs -domtree -sroa -basicaa -aa-memoryssa -early-cse-memssa -speculative-execution-domtree -basicaa -aa -lazy-value-info -jump-threading -lazy-value-info-correlated-propagation -simplifycfg -domtree -basicaa -aa-instcombine -libcalls-shrinkwrap -loops -branch-prob -block-freq-pgo-memop-opt-domtree -basicaa -aa-tailcallelim -simplifycfg -reassociate -domtree -loops -loop-simplify-lcssa-verification -lcssa -basicaa -aa-scalar-evolution -loop-rotate-licm -loop-unswitch -simplifycfg -domtree -basicaa -aa-instcombine -loops -loop-simplify-lcssa-verification -lcssa -scalar-evolution-indvars -loop-idiom -loop-deletion -loop-unroll -memdep -memcpyopt -sccp -domtree -demanded-bits-bdce -basicaa -aa-instcombine -lazy-value-info -jump-threading -lazy-value-info-correlated-propagation -domtree -basicaa -aa-memdep -dse -loops -loop-simplify-lcssa-verification -lcssa -aa-scalar-evolution-licm -postdomtree -adce -simplifycfg -domtree -basicaa -aa-instcombine -barrier -basiccg -rpo-functionattrs -globals-aa-float2int -domtree -loops -loop-simplify-lcssa-verification -lcssa -basicaa -aa-scalar-evolution -loop-rotate -loop-accesses -lazy-branch-prob -lazy-block-freq-opt-remark-emitter -loop-distribute-branch-prob -block-freq-scalar-evolution-basicaa -aa -loop-accesses -demanded-bits-lazy-branch-prob -lazy-block-freq-opt-remark-emitter -loop-vectorize -loop-simplify-scalar-evolution -aa -loop-accesses -loop-load-elim -basicaa -aa-instcombine -latesimplifycfg -domtree -basicaa -aa-instcombine -loops -loop-simplify-lcssa-verification -lcssa -scalar-evolution -loop-unroll -instcombine -loop-simplify-lcssa-verification -lcssa -scalar-evolution-licm -alignment-from-assumptions -strip-dead-prototypes -domtree -loops -branch-prob -block-freq -loop-simplify-lcssa-verification -lcssa -basicaa -aa-scalar-evolution-branch-prob -block-freq -loop-sink -lazy-branch-prob -lazy-block-freq-opt-remark-emitter -instsimplify -simplifycfg -verifyDisabling the passages one by one, find the sought, it globalopt. We can only leave this optimization pass to ensure that he, and no other breeds we need code. Its source is in the file /lib/Transforms/IPO/GlobalOpt.cpp. With source code you can find in the repository, the LLVM, and to bring it fully here, I will not, restricting only important for understanding its functions.

Let's see what makes this passage optimization. For starters, it implements the runOnModule method, i.e. at work, he sees and optimizes the complete module (which, incidentally, is logical in this case). Optimization directly deals with the function of optimizeGlobalsInModule:

the

static bool optimizeGlobalsInModule(

Module &M, const DataLayout &DL, TargetLibraryInfo *TLI,

function_ref<DominatorTree &(Function &)> LookupDomTree) {

SmallSet < const Comdat *, 8> NotDiscardableComdats;

bool Changed = false;

bool LocalChange = true;

while (LocalChange) {

LocalChange = false;

NotDiscardableComdats.clear();

for (const GlobalVariable &GV : M. globals())

if (const Comdat *C = GV.getComdat())

if (!GV.isDiscardableIfUnused() || !GV.use_empty())

NotDiscardableComdats.insert(C);

for (Function &F : M)

if (const Comdat *C = F. getComdat())

if (!F. isDefTriviallyDead())

NotDiscardableComdats.insert(C);

for (GlobalAlias &GA : M. aliases())

if (const Comdat *C = GA.getComdat())

if (!GA.isDiscardableIfUnused() || !GA.use_empty())

NotDiscardableComdats.insert(C);

// Delete functions that are trivially dead, ccc - > fastcc

LocalChange |=

OptimizeFunctions(M, TLI, LookupDomTree, NotDiscardableComdats);

// Optimize global_ctors list.

LocalChange |= optimizeGlobalCtorsList(M, [&] (Function *F) {

return EvaluateStaticConstructor(F, DL, TLI);

});

// Optimize non-address-taken globals.

LocalChange |= OptimizeGlobalVars(M, TLI, LookupDomTree,

NotDiscardableComdats);

// Resolve aliases, when possible.

LocalChange |= OptimizeGlobalAliases(M, NotDiscardableComdats);

// Try to remove trivial global destructors if they are not removed

// already.

Function *CXAAtExitFn = FindCXAAtExit(M, TLI);

if (CXAAtExitFn)

LocalChange |= OptimizeEmptyGlobalCXXDtors(CXAAtExitFn);

Changed |= LocalChange;

}

// TODO: Move all global ctors functions to the end of the module for code

// layout.

return Changed;

}Try to describe in words what this function does. For each global variable in a module, it requests the object Comdat.

What is Comdat

Comdat section is a section in the object file that smoky placed objects that can be duplicated in other object files. Each object has information for the linker, indicating that he should do in case of duplicates. The options are as follows: Any — anything, ExactMatch — the duplicates must be identical, otherwise an error occurs, the Largest — to take the object with the highest value, NoDublicates — should not be duplicate, SameSize duplicates must have the same size, otherwise an error occurs.

In LLVM Comdat represented by enumeration:

the

enum SelectionKind {

Any, ///< The linker may choose any COMDAT.

ExactMatch, /// < The data referenced by the COMDAT must be the same.

Largest, ///< The linker will choose the largest COMDAT.

NoDuplicates, ///< No other Module may specify this COMDAT.

SameSize, /// < The data referenced by the COMDAT must be the same size.

};and the class Comdat actually is a pair (Name, SelectionKind). (it is actually more difficult.) All the variables that for some reason cannot be removed and placed in many NotDiscardableComdats. With global functions and aliases go the same way — that can't be removed, placed in NotDiscardableComdats. When called by a separate function optimization, global engineers, global functions, global variables, global variables, and global destructors. Optimization continues in a cycle until, until no optimization. On each iteration of the loop resets many NotDiscardableComdats.

Let's see what objects of the following contains our test source code.

Global variables:

the

1. @Do = internal global i32 (...)* null, align 8

2. @.str = private unnamed_addr constant [13 x i8] c"hello world\0A\00", align 1(looking ahead, we say that the first variable optimizer will remove the first iteration)

Features:

the

define void @NeverCalled()

define i32 @main()

define internal i32 @PrintHello()

declare i32 @printf(i8*, ...)Please note that printf only declared (declare) but not defined (define).

Global aliases are not available.

Let's take the example of this optimization pass, consider, how come such a result. Of course, to understand fully all the variants of optimization even in one passage — a very big task since it has a lot of different special cases of optimizations. Let's concentrate on our example, simultaneously examining the functions and data structures that are important for the understanding of the work of the optimization pass.

Initially, the optimizer makes different little interest in this case inspection and viswajeet function processInternalGlobal, which tries to optimize the global variables. This function is also quite complex and does a lot of different things, but we are interested in one:

the

if (GS.StoredType == GlobalStatus::StoredOnce && GS.StoredOnceValue) {

...

// Try to optimize the global variables, about which we know that they are assigned

// value only once, not counting the initial value.

if (optimizeOnceStoredGlobal(GV, GS.StoredOnceValue, GS.Ordering, DL, TLI))

return true;

...

}Information about what global variable is assigned a value once and once only, is extracted from the structure of GS (GlobalStatus). This structure is filled in by the caller:

the

static bool

processGlobal(GlobalValue &GV, TargetLibraryInfo *TLI,

function_ref<DominatorTree &(Function &)> LookupDomTree) {

if (GV.getName().startswith("llvm."))

return false;

GlobalStatus GS;

if (GlobalStatus::analyzeGlobal(&GV, GS))

return false;

...Here we see another interesting fact: objects whose names start with "llvm." are not subject to optimization (as they are system calls of the runtime llvm). And, in any case, variable names in the language of the LLVM IR can contain periods (and even consist of one point with a prefix of @ or %). Function analyzeGlobal is calling the LLVM API and we will not consider its inner workings. The structure of the GlobalStatus is to dwell, as it contains very important information to optimization passes.

the

/// When we look at a global variable, store some information about her. If we

/// found that there is a capture variable addresses, no information from this structure

/// not be reliable

struct GlobalStatus {

/// True if the address of a global variable is used in comparison operations

bool IsCompared = false;

/// True if a global variable is assigned a value. If not, it

/// can be removed

bool IsLoaded = false;

/// How is the assignment of the value

enum StoredType {

/// No setter. A variable can be marked as const

NotStored,

/// Assignment occurs, but is assigned the same constant with which the variable

/// have been initialized. This is only tracked for scalar types.

InitializerStored,

/// Assignment occurs, but only during initialization and once

/// a different value. If the variable isStoredOnce, we record the value

/// which was rated in the field StoredOnceValue below. This is only checked for the scalar

/// variables.

StoredOnce,

/// Assignment occurs repeatedly or in a manner that

/// we can't trace.

Stored

} StoredType = NotStored;

/// If only one value (except for the initialization constants) recorded

/// into this global variable, the value to be stored here.

Value *StoredOnceValue = nullptr;

...

};It is, perhaps, to explain why "if we found that there is a capture variable addresses, no information of this structure will not be reliable." In fact, if we took the address of a global variable, and then write something to this address, not by name, to trace it will be extremely difficult, and it is better to leave these variables as is, without trying to optimize.

So, we get into the function optimizeOnceStoredGlobal, in the arguments which is passed a variable ( GV) and save the value (StoredOnceVal). Here they are:

the

@Do = internal unnamed_addr global i32 (...)* null, align 8 //variable

i32 (...)* bitcast (i32 ()* @PrintHello to i32 (...)*) // valueFurther, the values are deleted bitcast insignificant, and the variable is checked by the following condition:

the

if (GV- > getInitializer()->getType()->isPointerTy() &&

GV- > getInitializer ()- > isNullValue()) {

...that is, the variable must initializelayout a null pointer. If so, create a new variable, SOVC, corresponding to the value StoredOnceVal given to the type GV:

the

if (Constant *SOVC = dyn_cast<Constant>(StoredOnceVal)) {

if (GV- > getInitializer()->getType() != SOVC- > getType())

SOVC = ConstantExpr::getBitCast(SOVC, GV- > getInitializer()->getType());Here getBitCast method that returns the command bitcast leading types in LLVM IR.

After this function is called OptimizeAwayTrappingUsesOfLoads. It is passed the global variable GV and LV constant.

Directly optimized by the function of OptimizeAwayTrappingUsesOfValue(Value *V, Constant *NewV).

For each use of a variable:

the

for (auto UI = V- > user_begin(), E = V- > user_end(); UI != E; ) {

Instruction *I = cast < Instruction > (*UI++);if it is a Load command, replacing it with the operand with a new value:

the

if (LoadInst *LI = dyn_cast<LoadInst>(I)) {

LI->setOperand(0, NewV);

Changed = true;

}If the variable is used in a function call or invoke (which is what happens in our example), create a new function, replacing its argument with a new value:

the

if (isa < CallInst > (I) || isa < InvokeInst > (I)) {

CallSite CS(I);

if (CS.getCalledValue() == V) {

// Calling through the pointer! Turn into a direct call, but be careful

// that the pointer is not also being passed as an argument.

CS.setCalledFunction(NewV);

Changed = true;

PassedAsArg bool = false;

for (unsigned i = 0, e = CS.arg_size(); i != e; ++i)

if (CS.getArgument(i) == V) {

PassedAsArg = true;

CS.setArgument(i, NewV);

}All other function arguments are just copied.

Have similar replacement algorithms for GEP and Cast instructions, but in our case this is going on.

Further actions are as follows: review all usage of the global variable, trying to remove all but the assignment value. If this is successful, we can remove a variable Do.

So, we briefly reviewed the work of the optimization pass on the LLVM specific example. In principle, nothing complicated here, but requires great accuracy in the programming to anticipate all possible combinations of commands and of variable types. Of course, all this should be covered by tests. Study the source code of the LLVM optimizers will help you to write your optimize improve the code for any specific occasions.

the

Examples of some interesting optimizations in LLVM

Here are a few examples of how LLVM is able to optimize the code. These examples are not relevant to the example we just did, and made in other optimization passes, however, they are quite unusual and interesting.

First example

Consider the code that sums numbers from 1 to n-1:

the

int sum(int n) {

int s = 0;

for(int i = 0; i < n; i++)

s += i;

return s;

}Compiled with -O1:

the

define i32 @sum(i32 %n) local_unnamed_addr #0 {

entry:

%cmp6 = icmp sgt i32 %n, 0

br i1 %cmp6, label %for.cond.cleanup.loopexit, label %for.cond.cleanup

for.cond.cleanup.loopexit: ; preds = %entry

%0 = add i32 %n, -1

%1 = zext i32 %0 to i33

%2 = add i32 %n, -2

%3 = zext i32 %2 to i33

%4 = mul i33 %1, %3

%5 = lshr i33 %4, 1

%6 = trunc i33 %5 to i32

%7 = add i32 %6, %n

%8 = add i32 %7, -1

br label %for.cond.cleanup

for.cond.cleanup: ; preds = %for.cond.cleanup.loopexit, %entry

%s.0.lcssa = phi i32 [ 0, %entry ], [ %8, %for.cond.cleanup.loopexit ]

ret i32 %s.0.lcssa

}Suddenly, no cycle, but there is a wonderful type variables i33 (that is, 33-bit integer). How did this happen? LLVM transformed row sum in the formula: (n-1)*(n-2)/2 + n — 1. As in the calculation of the intermediate variables may overflow a 32-bit mesh LLVM inserted a variable of type i33. Note that he did this by analyzing unoptimized assembler code, but it is quite difficult. Under the spoiler is given neoptimizirovannost code for the same function, which directly believes in a loop:

unoptimized code

define i32 @sum(i32 %n) #0 {

entry:

%n.addr = alloca i32, align 4

%s = alloca i32, align 4

%i = alloca i32, align 4

store i32 %n, i32* %n.addr, align 4

store i32 0, i32* %s, align 4

store i32 0, i32* %i, align 4

br label %for.cond

for.cond: ; preds = %for.inc, %entry

%0 = load i32, i32* %i, align 4

%1 = load i32, i32* %n.addr, align 4

%cmp = icmp slt i32 %0, %1

br i1 %cmp, label %for.body, label %for.end

for.body: ; preds = %for.cond

%2 = load i32, i32* %i, align 4

%3 = load i32, i32* %s, align 4

%add = add nsw i32 %3, %2

store i32 %add, i32* %s, align 4

br label %for.inc

for.inc: ; preds = %for.body

%4 = load i32, i32* %i, align 4

%inc = add nsw i32 %4, 1

store i32 %inc, i32* %i, align 4

br label %for.cond

for.end: ; preds = %for.cond

%5 = load i32, i32* %s, align 4

ret i32 %5

}Even more interesting to see what happens with this example in the backend. Variable i33 is converted to i64, and if your processor is 32-bit, generates a sequence of commands for multiplication and addition 64-bit integers in a 32-bit system. Even more interesting, if the original example to change the data type to long. Then the argument and the return value will be of type i64, and intermediate variables will be the type of i65!

Second example

Suppose we decided to write a function that change the sign of the float, changing the 31-th bit of the binary representation of the float:

the

float sum(float x) {

int val = *((int*) &x);

int inv = val ^ (1 << 31);

return *((float*)&inv);

}When compiling for x86_64, there is nothing particularly interesting:

the

.LCPI0_0:

.long 2147483648 # float -0

.long 2147483648 # float -0

.long 2147483648 # float -0

.long 2147483648 # float -0

.text

.globl sum

.p2align 4, 0x90

.type sum,@function

sum: # @sum

.cfi_startproc

# BB#0: # %entry

xorps .LCPI0_0(%rip), %xmm0

retqBut if we compile for the ARM 64 (AARCH64):

the

invert: // @invert

// BB#0: // %entry

fneg s0, s0

retLLVM recognizes the change in the 31st bit command fneg, izmenenyaya sign float. For comparison, GCC does not know how, giving the "literal" option.

GCC 6.3 (ARM 64):

the

invert(float):

fmov w0, s0

eor w0, w0, -2147483648

fmov s0, w0

retThis is an example of target-specific optimization, which is done in the backend and not in the utility opt.

For this example, we want to say a few words. Such actions with pointers break the rule of strict aliasing, which can lead to neopredelennogo behavior when compiling with-strict-aliasing some compilers on some platforms (in fact, vanishingly small number of cases). For this example, the error occurs when compiling with gcc4.4.7 -m32 -O2, and disappears in more recent versions of gcc. However, I put in the reference list at the end of a link to an interesting lecture on aliasing.

Third example

Another example of target-specific optimization, this time to x86-64, and more specifically, to architecture Haswell.

Write a function count one bits in word:

the

int cntSetBits(int a) {

int cnt = 0;

while(a)

{

cnt++;

a &= (a-1);

}

return cnt;

}Compiled with -O1 -march=haswell:

the

cntSetBits(int): # @cntSetBits(int)

popcnt eax, edi

retThe whole function fits into a single popcnt instruction, which counts the number of ones in the word.

Look at IR

the

; Function Attrs: nounwind readnone uwtable norecurse

define i32 @cntSetBits(i32 %a) local_unnamed_addr #0 {

entry:

%0 = call i32 @llvm.ctpop.i32(i32 %a)!range !2

ret i32 %0

}Here we see that you are using the built-in function llvm.ctpop.i32. Put already front-end using high-level information about the code and the backend for this architecture knows about this function and can replace it with a special command.

the

Useful links

http://www.drdobbs.com/architecture-and-design/the-design-of-llvm/240001128 — Chris Lattner, "The Design of LLVM"

https://youtu.be/bSkpMdDe4g4 — Matt Godbolt, "What has my compiler done for me lately?"

https://youtu.be/ACW-7dktyDk Dmitry Kashitsyn "Trolley from loaf: aliasing and vectorization in LLVM"

Комментарии

Отправить комментарий